In the ever-evolving database market, one thing is constant: prices tend to go up over time. Vendors tweak their pricing models, introduce new tiers, and — more often than not — raise costs as their products mature. If you’re a developer, data engineer or architect, understanding these trends can help you make smarter decisions about where and how to store and process data.

Your Favorite Database Just Got More Expensive (Again)

Remember when ClickHouse was the fast, cost-efficient darling of analytics? Well, even it recently bumped up pricing by 30%. (Read more.)

Amazon RDS follows a similar pattern. While EC2 prices remain relatively stable, AWS has increased the margins on RDS over time, meaning managed database services get more expensive while raw compute doesn’t. (See analysis.)

And let’s not forget the industry’s collective PTSD from Oracle’s aggressive pricing tactics. NASA, for example, ended up overpaying Oracle by tens of millions due to confusing and predatory license structures. (Case study.)

Not long ago, PlanetScale dropped its cheap Hobby plan because it wasn’t financially viable. CEO Sam Lambert explained that while it attracted a large number of users, it wasn’t leading to conversions that changed into paying customers at a sustainable rate. He also noted that cloud infrastructure costs, especially egress fees, made the plan economically unfeasible at scale. (Details here.)

This is a pattern across the industry — free tiers and low-cost plans drive adoption but often fail to convert into meaningful revenue unless carefully structured.

Autoscaling: Convenient, but Does Budget Autoscale, Too?

Many modern database vendors now offer usage-based pricing models that automatically scale with demand. While this flexibility is great, it can also lead to unexpected costs.

- BigQuery cost pitfalls: BigQuery’s $5 per TB scanned sounds cheap — until you realize that querying a large data set without proper cost controls can lead to shockingly high bills. In one notable case, an HTTP Archive user attempting to query a large data set accumulated a $14,000 charge overnight after forgetting to apply limits on the scan size. (Read more.)

- Transactional databases like Supabase and Neon: These services scale to zero, allowing for cost savings on idle workloads. However, once workloads ramp up, pricing jumps quickly. For example, Neon’s lowest-paid tier starts at $0, but a scale plan costs between $69/month to $870/month depending how much compute you use. (Neon pricing.)

- Analytics databases like Snowflake, Databricks, ClickHouse and BigQuery: Compute costs dominate. Snowflake, for example, charges approximately $2 per credit, with warehouses scaling up costs significantly when running complex queries. A single unoptimized query can cost hundreds or even thousands of dollars.

Autoscaling isn’t inherently bad — it’s a powerful tool when combined with the right cost controls. The real issue is when platforms prioritize flexibility without giving users enough visibility or safeguards to prevent runaway bills.

Another case of autoscaling-induced sticker shock comes from serverless databases. While marketed as cost-effective and highly flexible, many serverless offerings — like DynamoDB’s on-demand mode — can become extremely expensive compared to provisioned alternatives.

DynamoDB on-demand charges per request, making it roughly five to seven times more expensive than provisioned capacity for high-traffic applications. On-demand writes, for instance, are billed at $1.25 per million requests, whereas provisioned writes can cost as little as $0.18 per million. (See AWS pricing.)

While this model makes sense for unpredictable workloads, applications with steady traffic patterns can rack up significant costs unnecessarily. Opting for provisioned capacity with auto-scaling safeguards is often a better alternative for keeping costs in check.

Taming Autoscaling: Lessons From Different Approaches

Not all autoscaling implementations are the same. Some vendors allow for greater cost predictability by designing their architecture around storage-first principles rather than compute-heavy processing.

For example, Hydrolix, a fork of ClickHouse, focuses on keeping compute costs in check by optimizing query execution and tightly integrating with cost-effective cloud object storage like AWS S3. Instead of constantly spinning up expensive compute resources, it minimizes the need for on-demand scaling through better indexing and efficient query filtering.

The Hidden Toll: Data Transfer Fees and Vendor Lock-In

Compute and autoscaling costs tend to grab attention, but data transfer fees are another major source of surprise expenses. Moving data between regions, services or out of a cloud provider’s network can quietly rack up costs in ways that aren’t always obvious upfront.

- Egress fees add up fast: AWS provides 100 GB of free data transfer out to the internet per month; beyond this, charges apply. For example, transferring data out of AWS to the internet is billed at $0.09 per GB for most regions. Regularly moving large data sets can thus result in significant costs.

- Inter-region transfers are not free: Even staying within the same cloud provider doesn’t always protect you. AWS charges $0.02 per GB for inter-region transfers, meaning cross-region replication or analytics pipelines that span multiple regions can silently eat into your budget.

- Vendors profit from lock-in: Once you start accumulating petabytes of data inside a cloud ecosystem, data transfer costs act as a deterrent to switching providers. The more you move, the more you pay, creating an expensive form of vendor lock-in that few organizations consider early enough.

There have been notable incidents where organizations faced unexpected and substantial charges due to data transfer misconfigurations. For example, a misconfigured AWS S3 bucket exposed sensitive customer data, resulting in a massive data breach. The company responsible for the misconfiguration had to cover the costs of the breach and incurred a bill of $2.3 million for the data transfer and storage fees.

How to Keep Data Transfer Costs in Check:

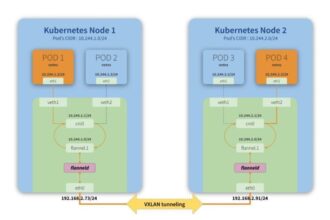

- Optimize data movement: Design your architecture to minimize unnecessary data transfers. Keep data processing and storage within the same region to avoid inter-region fees.

- Use cloud cost monitoring tools: AWS, Google Cloud and Microsoft Azure offer tools to track data transfer costs. Setting up alerts can help prevent budget blowouts before they happen. (Check out NetApp BlueXP for an in-depth analysis.)

- Batch and compress data: Instead of streaming data continuously, batch transfers and use compression to cut down on the amount of data being moved.

Cloud providers don’t make data transfer fees an obvious line item in pricing models, but ignoring them can be a costly mistake. Smart architecture decisions and proactive monitoring go a long way in avoiding unnecessary expenses.

Storage vs. Compute Costs: What Really Drives Your Bill?

Historically, database vendors have employed various pricing models. In the 1980s, Oracle introduced a per-server licensing model, allowing unlimited users to access the database on a single server. As multicore processors became prevalent, licensing models evolved to account for the increased processing power, leading to per-core pricing structures.

Today, this approach remains prevalent among major data platforms such as Snowflake, BigQuery and Databricks:

- Storage is affordable: Platforms that decouple storage and compute, such as Snowflake, BigQuery and Databricks, generally charge near the raw cloud storage prices, typically ranging from $20 to $40 per terabyte (TB) per month. However, vendors with coupled storage and compute models can impose higher costs, especially for large data sets that require minimal compute resources (See Google Cloud BigQuery storage pricing.)

- Compute is the profit driver: Cloud data vendors capitalize on processing power. For instance, Snowflake and Databricks charge based on “credits” or “DBUs” (Databricks Units), while BigQuery charges by the amount of data scanned, at $5 per TB. The costs for compute can rapidly outpace storage, especially for unoptimized workloads. (Databricks pricing, Snowflake pricing)

- Databricks vs. Snowflake compute costs:

- Databricks: Databricks uses Databricks Units (DBUs) to measure processing capability per unit of time. The cost per DBU varies based on the type of workload, ranging from approximately $0.07 for lightweight jobs to $0.95 for interactive analytics. For instance, running a job that consumes 10 DBUs for an hour would cost between $0.70 and $9.50, depending on the workload category. Spot instances and reserved capacity can provide savings, but without optimization, costs can spiral. (See Databricks pricing.)

- Snowflake: Compute costs are based on virtual warehouses, starting at about $2/hour for an X-Small instance. Costs scale exponentially with warehouse size. Choosing the right warehouse size, using auto-suspend features and avoiding over-provisioning are key to keeping Snowflake costs under control. (See Snowflake Optimization Tips.)

- BigQuery: A different approach to compute pricing: Google BigQuery offers a $2,000/month flat-rate plan for 100 slots ( approximately $20 per slot per month). This benefits organizations with steady query loads but can be inefficient for variable workloads. Cost-conscious users must monitor query patterns and optimize data structures to minimize processing costs. (See BigQuery pricing.)

If you don’t optimize queries and workloads, costs can skyrocket. Snowflake’s margins, for example, are primarily from compute consumption, not storage.

Finding Cost-Effective Database Solutions

Given the ever-increasing costs of analytics and transactional workloads, companies need to explore alternatives that provide the same functionality without breaking the bank. One major cost driver is Elasticsearch, which many organizations rely on for log and search analytics, but it can become prohibitively expensive at scale.

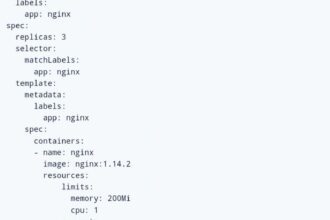

This is where Quesma comes in. For example, if you’re struggling with Elasticsearch’s rising costs, Quesma provides an alternative approach with its database proxy. By seamlessly translating Elastic queries into SQL, it allows organizations to leverage more cost-efficient database backends, including ClickHouse and Hydrolix. This means you can retain the flexibility of Elasticsearch while significantly reducing infrastructure expenses.

Pick Your Vendor or Your Vendor Picks Your Wallet

The shift to usage-based pricing means you need to be strategic. The key takeaways:

- Expect price hikes as vendors mature: What’s cheap today may not be tomorrow.

- Autoscaling pricing is powerful but dangerous: Set safeguards to avoid surprise bills.

- Compute is the real cost: Storage is cheap, but processing data is where vendors make their money.

- Data transfer fees add up quickly: Minimize unnecessary data movement to keep costs under control.

- Pick the right vendor for your workload: Instead of throwing away data, optimize how and where you store and process it.

Choosing the right database isn’t just about performance, it’s about making sure your pricing model works for you, not against you.

The post Trends in Modern Database Pricing: What You Need to Know appeared first on The New Stack.