Ambassador post originally published on Gerald on IT by Gerald Venzl

In this guide, I’ll cover how to run a production-ready Raspberry Pi Kubernetes cluster using K3s.

Background

If you are like me, you probably have a bunch of (older) Raspberry Pi models lying around not doing much because you replaced them with newer models. So, instead of just having them collect dust, why not create your own little Kubernetes cluster and deploy something on it, or just use it to learn Kubernetes?

Setup

Hardware

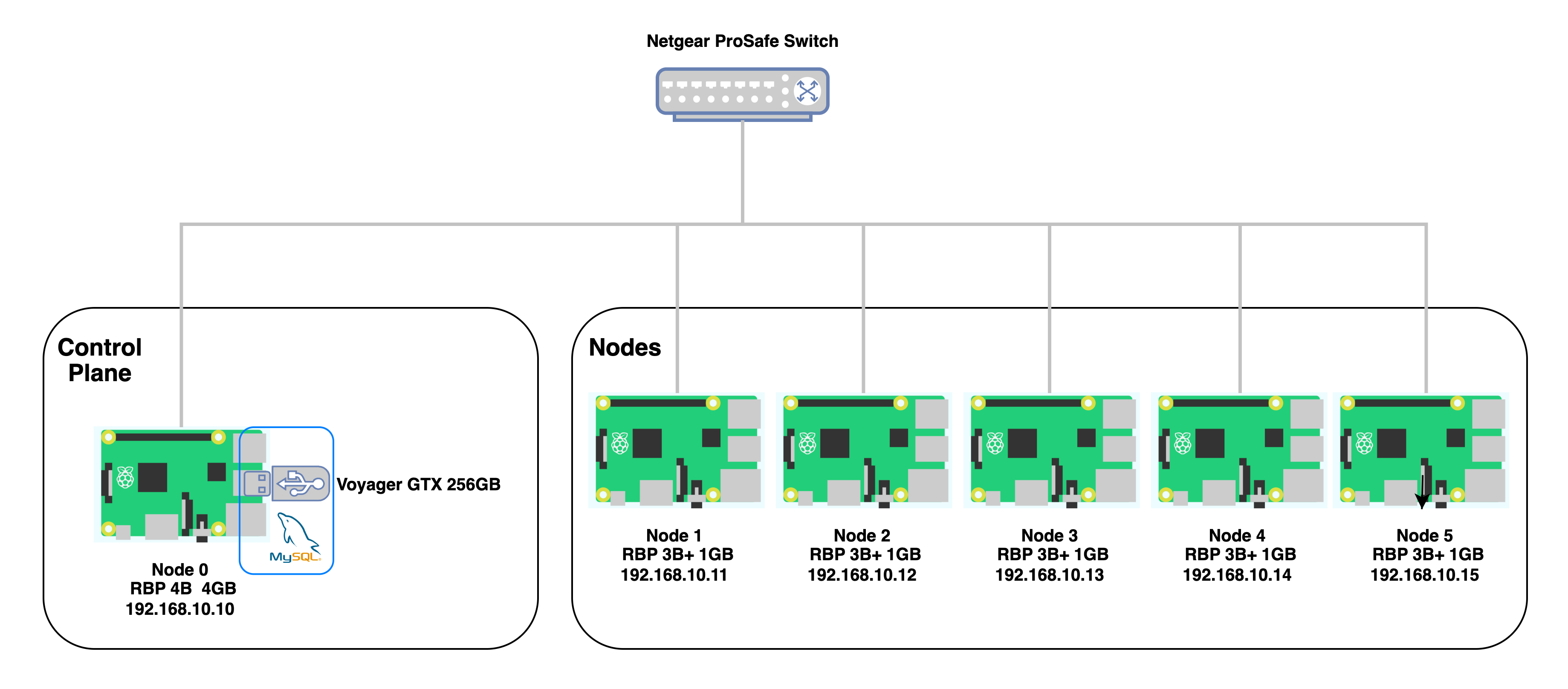

- 1 x Raspberry Pi 4 Model B with 4 GB RAM

- 1 x Raspberry Pi 4 Model B with 2 GB RAM

- 4 x Raspberry Pi 3 Model B+ with 1 GB RAM

- 1 x Netgear ProSafe 8 Port Gigabit unmanaged switch

- 1 x Corsair Flash Voyager GTX 256GB USB 3.1 Premium Flash Drive

Note: This is simply the hardware I have available; it is by no means a recommendation for building your own cluster. If you have newer, more powerful Raspberry Pi models, you are probably better off using them instead.

Software

All Raspberry Pis are running Raspberry Pi OS (with desktop):

- Release date: November 19th 2024

- System: 64-bit

- Kernel version: 6.6

- Debian version: 12 (bookworm)

Why K3s?

K3s is a lightweight, CNCF-certified, fully compliant Kubernetes distribution. It ships as a single binary, requires about half the memory of upstream Kubernetes, supports data stores other than etcd, and more. As the K3s website says, it is great for:

- Edge

- Homelab

- Internet of Things (IoT)

- Continuous Integration (CI)

- Development

- Single board computers (ARM)

- Air-gapped environments

- Embedded K8s

- Situations where a PhD in K8s clusterology is infeasible

Another advantage of K3s for Raspberry Pis is that it supports data stores other than etcd. That’s great because, as the K3s documentation points out, etcd is write-intensive, and SD cards usually cannot handle the I/O load:

K3s performance depends on the performance of the database. To ensure optimal speed, we recommend using an SSD when possible.

If deploying K3s on a Raspberry Pi or other ARM devices, it is recommended that you use an external SSD. etcd is write intensive; SD cards and eMMC cannot handle the IO load.

In my case, I am using an external MariaDB database running on the Corsair Flash Voyager GTX 256GB USB 3.1 Premium Flash Drive. For comparison, the SanDisk 256GB Extreme microSDXC UHS-I card is rated at up to 130MB/s write and up to 190MB/s read, while the Voyager GTX USB 3.1 is rated at up to 440MB/s for both reads and writes. However, the flash drive costs about 2.5 times as much as the microSD card.

Installation

cgroups

Kubernetes requires the cgroups (control groups) Linux kernel feature. Unfortunately, the memory subsystem of this feature is not enabled by default in the latest Raspberry Pi OS image. To check whether it is, run cat /proc/cgroups and look for a 1 in the enabled column of the memory row:

gvenzl@gvenzl-rbp-0:~ $ cat /proc/cgroups

#subsys_name hierarchy num_cgroups enabled

cpuset 0 58 1

cpu 0 58 1

cpuacct 0 58 1

blkio 0 58 1

memory 0 58 0

devices 0 58 1

freezer 0 58 1

net_cls 0 58 1

perf_event 0 58 1

net_prio 0 58 1

pids 0 58 1

If you see a 0, as in the output above, you have to enable the memory subsystem. This is done by adding cgroup_enable=memory to the /boot/firmware/cmdline.txt file and then rebooting the system. The quickest way to do this is via these commands (note: sudo reboot will reboot your Raspberry Pi):

sudo sh -c 'echo " cgroup_enable=memory" >> /boot/firmware/cmdline.txt'

sudo reboot

Note: cgroup_enable=cpuset and cgroup_memory=1 are no longer required.
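If you would rather have a step that is safe to run more than once, here is a minimal sketch of a slightly different approach. It assumes that cmdline.txt holds the whole kernel command line on a single line, as the Raspberry Pi documentation expects, and that GNU sed is available (as on Raspberry Pi OS). The hypothetical add_kernel_param function appends the parameter to the end of the first line with sed instead of echo >>, and does nothing if the parameter is already present. The path defaults to /boot/firmware/cmdline.txt, but you can pass a copy of the file to try it out first.

```shell
# Hypothetical helper (not part of Raspberry Pi OS or K3s): append a kernel
# parameter to the end of the first line of cmdline.txt unless it is already
# there. Assumes the parameter contains no "/" or "&", which sed would treat
# specially, and that GNU sed (with its -i option) is available.
add_kernel_param() {
  param="$1"
  file="${2:-/boot/firmware/cmdline.txt}"

  # -F: literal match, -w: whole word only, so re-running is a no-op
  if grep -qwF -- "$param" "$file"; then
    echo "$param is already present in $file"
    return 0
  fi

  # '1 s/$/ .../' appends to the end of line 1 only, keeping a single line
  sed -i "1 s/\$/ $param/" "$file" || return 1
  echo "added $param to $file; reboot to apply"
}
```

Writing the real file requires root, so load the function in a root shell (for example, after sudo -i), run add_kernel_param cgroup_enable=memory, and then reboot.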

Once the system is up again, double-check the entry for the memory subsystem, which should now show a 1:

gvenzl@gvenzl-rbp-0:~ $ cat /proc/cgroups

#subsys_name hierarchy num_cgroups enabled

cpuset 0 94 1

cpu 0 94 1

cpuacct 0 94 1

blkio 0 94 1

memory 0 94 1

devices 0 94 1

freezer 0 94 1

net_cls 0 94 1

perf_event 0 94 1

net_prio 0 94 1

pids 0 94 1

Repeat the above step on every Raspberry Pi before continuing.
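Checking the enabled column by eye on six Pis gets tedious. The following minimal sketch does the same check in a script, assuming the /proc/cgroups layout shown above (subsystem name in the first column, enabled flag in the fourth). The function name memory_cgroup_enabled is hypothetical; it exits with status 0 only when the memory row exists and is enabled, and its optional argument lets you point it at a saved copy of the file.

```shell
# Hypothetical helper: succeed (exit status 0) only if the "memory" row of
# /proc/cgroups has a 1 in the "enabled" column. A missing row counts as
# disabled. Pass a file path to inspect a saved copy instead.
memory_cgroup_enabled() {
  awk '
    # Data rows look like: memory <hierarchy> <num_cgroups> <enabled>
    $1 == "memory" { found = 1; enabled = ($4 == 1) }
    # exit 0 when found and enabled, 1 otherwise
    END { exit !(found && enabled) }
  ' "${1:-/proc/cgroups}"
}
```

For example: if memory_cgroup_enabled; then echo "memory cgroup enabled"; else echo "memory cgroup disabled"; fi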

Static IP address configuration

Static IP addresses make cluster management and communication easier. They ensure that each device always has the same IP address, which makes it easier to identify a given node and prevents communication disruptions between nodes caused by changing IP addresses. Raspberry Pi OS now uses NetworkManager and its associated command-line utilities. For an interactive, text-based UI, use the nmtui (NetworkManager text user interface) command. For scripting, use nmcli (NetworkManager command-line interface) to assign static IP addresses to the Raspberry Pis. You should find a preconfigured connection named Wired connection 1 on the Ethernet device eth0, which you can verify via nmcli con show:

gvenzl@gvenzl-rbp-0:~ $ nmcli con show

NAME UUID TYPE DEVICE

preconfigured 999a68d9-b3e1-4437-bf86-1c9a5f775159 wifi wlan0

lo db18dd9c-94fe-4fac-8c66-d6ddc3406900 loopback lo

Wired connection 1 68f30a89-ef57-3ee9-8238-9310f0829f21 ethernet eth0

To give the Ethernet connection a static IP address, use sudo nmcli con mod. NN is the last octet of the address you want to assign to each Raspberry Pi; in my case, it’s 10, 11, 12, 13, 14, and 15 for the six nodes. Adjust the network prefix to match your own network; the rest of this guide uses addresses in 192.168.10.0/24, with the control plane at 192.168.10.10:

sudo nmcli con mod "Wired connection 1" ipv4.addresses "192.168.0.NN/24" ipv4.method manual

If you also want to set a gateway address to reach the outside network and/or the internet, and DNS servers for name resolution, you can do so with the following commands:

sudo nmcli con mod "Wired connection 1" ipv4.gateway 192.168.0.1

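sudo nmcli con mod "Wired connection 1" ipv4.dns "192.168.0.1, 1.1.1.1, 8.8.8.8"

Note: In my case, I reach the outside world via the WiFi connection. The Ethernet connection is purely for cluster communication.

The modified settings take effect once the connection is reactivated, for example with sudo nmcli con up "Wired connection 1", or after a reboot.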

Repeat the above step on every Raspberry Pi before continuing.
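Since these commands are repeated on every node with only NN changing, they can be wrapped in a small function. This is a sketch under a few assumptions: the connection is named Wired connection 1 as shown above, the network is a /24, and set_static_ip and its DRY_RUN switch are hypothetical names. The prefix defaults to 192.168.0 as in the nmcli command above; pass 192.168.10 to match the addresses used later in this guide. The function also reactivates the connection with nmcli con up so that the new address is applied right away.

```shell
# Hypothetical helper: give "Wired connection 1" a static IPv4 address
# and reactivate it so the change takes effect.
# Usage: set_static_ip NN [PREFIX]
#   NN      last octet, e.g. 10 for the first Pi
#   PREFIX  first three octets (default 192.168.0)
# If DRY_RUN is set to any non-empty value, the nmcli commands are printed
# instead of executed.
set_static_ip() {
  nn="$1"
  prefix="${2:-192.168.0}"
  conn="Wired connection 1"

  # Reject anything that is not a plain number in the usable host range
  case "$nn" in
    ''|*[!0-9]*) echo "NN must be a number" >&2; return 1 ;;
  esac
  if [ "$nn" -lt 1 ] || [ "$nn" -gt 254 ]; then
    echo "NN must be between 1 and 254" >&2
    return 1
  fi

  run=""
  if [ -n "${DRY_RUN:-}" ]; then
    run="echo"
  fi

  $run sudo nmcli con mod "$conn" ipv4.addresses "$prefix.$nn/24" ipv4.method manual || return 1
  $run sudo nmcli con up "$conn"
}
```

For example, DRY_RUN=1 set_static_ip 10 192.168.10 prints the two nmcli commands for the first Pi without changing anything. If you are logged in over that same Ethernet connection, expect the session to drop once the address changes.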

Installing K3s

The simplest way to install K3s is by running curl -sfL https://get.k3s.io | sh -. However, because I’m using an external MariaDB database as the cluster data store, things are a bit different: instead of its embedded data store, K3s needs to connect to the MariaDB database. This is done by supplying the --datastore-endpoint parameter or the K3S_DATASTORE_ENDPOINT environment variable during the installation. For more details, see Cluster Datastore in the K3s documentation.
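The MySQL-style endpoint has the form mysql://<username>:<password>@tcp(<hostname>:3306)/<database-name>. A hypothetical helper like the one below builds that string so it can be passed through the K3S_DATASTORE_ENDPOINT environment variable. It is a sketch that assumes the password contains no characters with a special meaning in the endpoint (such as @, / or :); the port and database name default to 3306 and kubernetes, matching the values used in this guide.

```shell
# Hypothetical helper: build a K3s datastore endpoint for MySQL/MariaDB in the
# form mysql://user:password@tcp(host:port)/database.
# Assumes the password needs no escaping (no @, / or : characters).
# Usage: k3s_mysql_endpoint USER PASSWORD HOST [PORT] [DATABASE]
k3s_mysql_endpoint() {
  user="$1"
  password="$2"
  host="$3"
  port="${4:-3306}"
  db="${5:-kubernetes}"
  printf 'mysql://%s:%s@tcp(%s:%s)/%s\n' "$user" "$password" "$host" "$port" "$db"
}
```

Assuming DB_PASSWORD holds the password, the installation could then look like this: curl -sfL https://get.k3s.io | K3S_DATASTORE_ENDPOINT="$(k3s_mysql_endpoint kubernetes "$DB_PASSWORD" 192.168.10.10)" sh -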

Creating the MariaDB database and user for Kubernetes

K3s can connect to the MariaDB socket at /var/run/mysqld/mysqld.sock as the root user if only mysql:// is provided as the datastore endpoint. However, that means the database needs to run on the same host as the control plane, and socket connectivity for root has to be enabled in the MariaDB configuration. Alternatively, you can create a user and database manually, which is what I will do. The user and the database will both be called kubernetes. Here are the four SQL statements you will need for that:

CREATE DATABASE kubernetes;

CREATE USER 'kubernetes'@'<your IP address range>' IDENTIFIED BY '<your password>';

GRANT ALL PRIVILEGES ON kubernetes.* TO 'kubernetes'@'<your IP address range>';

FLUSH PRIVILEGES;
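To run these statements non-interactively, for example from a setup script, a minimal sketch could generate them and pipe them into sudo mysql. The function k3s_db_sql is hypothetical; it takes the IP address range and the password as arguments and, because both end up inside single-quoted SQL string literals, it refuses values containing a single quote or a backslash instead of trying to escape them.

```shell
# Hypothetical helper: print the four SQL statements for the K3s datastore
# (database and user both named "kubernetes") for a given IP range and
# password. Values with quotes or backslashes are rejected, not escaped.
# Usage: k3s_db_sql 'IP_RANGE' 'PASSWORD' | sudo mysql
k3s_db_sql() {
  range="$1"
  password="$2"

  if [ -z "$range" ] || [ -z "$password" ]; then
    echo "usage: k3s_db_sql IP_RANGE PASSWORD" >&2
    return 1
  fi

  case "$range$password" in
    *\'*|*\\*)
      echo "IP range and password must not contain quotes or backslashes" >&2
      return 1
      ;;
  esac

  cat <<EOF
CREATE DATABASE kubernetes;
CREATE USER 'kubernetes'@'$range' IDENTIFIED BY '$password';
GRANT ALL PRIVILEGES ON kubernetes.* TO 'kubernetes'@'$range';
FLUSH PRIVILEGES;
EOF
}
```

For example: k3s_db_sql '192.168.10.%' "$DB_PASSWORD" | sudo mysql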

gvenzl@gvenzl-rbp-0:~ $ sudo mysql

Welcome to the MariaDB monitor. Commands end with ; or \g.

Your MariaDB connection id is 36

Server version: 10.11.6-MariaDB-0+deb12u1 Debian 12

Copyright (c) 2000, 2018, Oracle, MariaDB Corporation Ab and others.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

MariaDB [(none)]> CREATE DATABASE kubernetes;

Query OK, 1 row affected (0.001 sec)

MariaDB [(none)]> CREATE USER 'kubernetes'@'192.168.10.%' IDENTIFIED BY '*********';

Query OK, 0 rows affected (0.005 sec)

MariaDB [(none)]> GRANT ALL PRIVILEGES ON kubernetes.* TO 'kubernetes'@'192.168.10.%';

Query OK, 0 rows affected (0.002 sec)

MariaDB [(none)]> FLUSH PRIVILEGES;

Query OK, 0 rows affected (0.002 sec)

MariaDB [(none)]> exit;

Bye

gvenzl@gvenzl-rbp-0:~ $

Running the K3s setup script on the control plane Raspberry Pi

To start the K3s installation, run a slightly different variation of the setup command above that includes the --datastore-endpoint parameter:

curl -sfL https://get.k3s.io | sh -s - --datastore-endpoint "mysql://<username>:<password>@tcp(<hostname>:3306)/<database-name>"

In my case, this is going to look like this:

curl -sfL https://get.k3s.io | sh -s - --datastore-endpoint "mysql://kubernetes:*******@tcp(192.168.10.10:3306)/kubernetes"

gvenzl@gvenzl-rbp-0:~ $ curl -sfL https://get.k3s.io | sh -s - --datastore-endpoint "mysql://kubernetes:*********@tcp(192.168.10.10:3306)/kubernetes"

[INFO] Finding release for channel stable

[INFO] Using v1.30.6+k3s1 as release

[INFO] Downloading hash https://github.com/k3s-io/k3s/releases/download/v1.30.6+k3s1/sha256sum-arm64.txt

[INFO] Downloading binary https://github.com/k3s-io/k3s/releases/download/v1.30.6+k3s1/k3s-arm64

[INFO] Verifying binary download

[INFO] Installing k3s to /usr/local/bin/k3s

[INFO] Finding available k3s-selinux versions

sh: 416: [: k3s-selinux-1.6-1.el9.noarch.rpm: unexpected operator

[INFO] Creating /usr/local/bin/kubectl symlink to k3s

[INFO] Creating /usr/local/bin/crictl symlink to k3s

[INFO] Creating /usr/local/bin/ctr symlink to k3s

[INFO] Creating killall script /usr/local/bin/k3s-killall.sh

[INFO] Creating uninstall script /usr/local/bin/k3s-uninstall.sh

[INFO] env: Creating environment file /etc/systemd/system/k3s.service.env

[INFO] systemd: Creating service file /etc/systemd/system/k3s.service

[INFO] systemd: Enabling k3s unit

Created symlink /etc/systemd/system/multi-user.target.wants/k3s.service → /etc/systemd/system/k3s.service.

[INFO] Host iptables-save/iptables-restore tools not found

[INFO] Host ip6tables-save/ip6tables-restore tools not found

[INFO] systemd: Starting k3s

gvenzl@gvenzl-rbp-0:~ $

Once the script has finished, verify the control plane setup via sudo kubectl get nodes:

gvenzl@gvenzl-rbp-0:~ $ sudo kubectl get nodes

NAME STATUS ROLES AGE VERSION

gvenzl-rbp-0 Ready control-plane,master 2m48s v1.30.6+k3s1

Adding nodes to the cluster

To add the additional Pis to the cluster, you must first retrieve the cluster token in the /var/lib/rancher/k3s/server/token file, which is needed for the agent installation. You can do that via the following command:

sudo cat /var/lib/rancher/k3s/server/token

You will get a token that looks something like this:

gvenzl@gvenzl-rbp-0:~ $ sudo cat /var/lib/rancher/k3s/server/token

K103bf5abb471fc2f7bcda85fa95a60c0f934a22a858c6ae943f4d7e0ee4091bc11::server:f3d376e3274a174a38b3b97d224aac6d

Once you have retrieved the token, connect to each of the other Raspberry Pis and execute the following command:

curl -sfL https://get.k3s.io | K3S_URL=https://<control plane node IP>:6443 K3S_TOKEN=<server token> sh -

For example:

curl -sfL https://get.k3s.io | K3S_URL=https://192.168.10.10:6443 K3S_TOKEN=K103bf5abb471fc2f7bcda85fa95a60c0f934a22a858c6ae943f4d7e0ee4091bc11::server:f3d376e3274a174a38b3b97d224aac6d sh -

gvenzl@gvenzl-rbp-1:~ $ curl -sfL https://get.k3s.io | K3S_URL=https://192.168.10.10:6443 K3S_TOKEN=K103bf5abb471fc2f7bcda85fa95a60c0f934a22a858c6ae943f4d7e0ee4091bc11::server:f3d376e3274a174a38b3b97d224aac6d sh -

[INFO] Finding release for channel stable

[INFO] Using v1.30.6+k3s1 as release

[INFO] Downloading hash https://github.com/k3s-io/k3s/releases/download/v1.30.6+k3s1/sha256sum-arm64.txt

[INFO] Downloading binary https://github.com/k3s-io/k3s/releases/download/v1.30.6+k3s1/k3s-arm64

[INFO] Verifying binary download

[INFO] Installing k3s to /usr/local/bin/k3s

[INFO] Finding available k3s-selinux versions

sh: 416: [: k3s-selinux-1.6-1.el9.noarch.rpm: unexpected operator

[INFO] Creating /usr/local/bin/kubectl symlink to k3s

[INFO] Creating /usr/local/bin/crictl symlink to k3s

[INFO] Creating /usr/local/bin/ctr symlink to k3s

[INFO] Creating killall script /usr/local/bin/k3s-killall.sh

[INFO] Creating uninstall script /usr/local/bin/k3s-agent-uninstall.sh

[INFO] env: Creating environment file /etc/systemd/system/k3s-agent.service.env

[INFO] systemd: Creating service file /etc/systemd/system/k3s-agent.service

[INFO] systemd: Enabling k3s-agent unit

Created symlink /etc/systemd/system/multi-user.target.wants/k3s-agent.service → /etc/systemd/system/k3s-agent.service.

[INFO] Host iptables-save/iptables-restore tools not found

[INFO] Host ip6tables-save/ip6tables-restore tools not found

[INFO] systemd: Starting k3s-agent

Once the installation has finished on all nodes, your K3s cluster is up and running. You can verify that by running sudo kubectl get nodes on the control plane one more time:

gvenzl@gvenzl-rbp-0:~ $ sudo kubectl get nodes

NAME STATUS ROLES AGE VERSION

gvenzl-rbp-0 Ready control-plane,master 16m v1.30.6+k3s1

gvenzl-rbp-1 Ready <none> 5m42s v1.30.6+k3s1

gvenzl-rbp-2 Ready <none> 3m14s v1.30.6+k3s1

gvenzl-rbp-3 Ready <none> 2m36s v1.30.6+k3s1

gvenzl-rbp-4 Ready <none> 2m7s v1.30.6+k3s1

gvenzl-rbp-5 Ready <none> 42s v1.30.6+k3s1

gvenzl@gvenzl-rbp-0:~ $

Congratulations, you now have a K3s cluster ready for action!
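When adding several nodes, it can help to check the result in a script rather than reading the table. This minimal sketch assumes the default kubectl get nodes output format shown above (a header row, then the status in the second column); check_nodes_ready is a hypothetical name. It prints a summary and exits non-zero unless every listed node reports exactly Ready, so a node shown as NotReady or Ready,SchedulingDisabled counts as not ready.

```shell
# Hypothetical helper: read `kubectl get nodes` output on stdin, print how
# many nodes are Ready, and succeed only if all listed nodes are Ready.
# Skips the header row and any blank lines.
check_nodes_ready() {
  awk '
    NR > 1 && NF > 0 {
      total++
      if ($2 == "Ready") ready++
    }
    END {
      printf "%d/%d nodes Ready\n", ready, total
      exit !(total > 0 && ready == total)
    }
  '
}
```

Run it as sudo kubectl get nodes | check_nodes_ready. If you would rather wait for the nodes than check once, kubectl can do that itself: sudo kubectl wait --for=condition=Ready node --all --timeout=120s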

Bonus: Access your cluster from the outside with kubectl

If you want to access the cluster from, e.g., your local MacBook with kubectl, you will need to save a copy of the /etc/rancher/k3s/k3s.yaml file locally as ~/.kube/config (the k3s.yaml file needs to be called config) and replace the value of the server field with the IP address or name of the K3s server.

kubectl itself can be installed via Homebrew:

gvenzl@gvenzl-mac ~ % brew install kubectl

==> Downloading https://ghcr.io/v2/homebrew/core/kubernetes-cli/manifests/1.31.3

Already downloaded: /Users/gvenzl/Library/Caches/Homebrew/downloads/f8fd19d10e239038f339af3c9b47978cb154932f089fbf6b7d67ea223df378de--kubernetes-cli-1.31.3.bottle_manifest.json

==> Fetching kubernetes-cli

==> Downloading https://ghcr.io/v2/homebrew/core/kubernetes-cli/blobs/sha256:fd154ae205719c58f90bdb2a51c63e428c3bf941013557908ccd322d7488fb67

Already downloaded: /Users/gvenzl/Library/Caches/Homebrew/downloads/ec1af5c100c16e5e4dc51cff36ce98eb1e257a312ce5a501fae7a07724e59bf9--kubernetes-cli--1.31.3.sonoma.bottle.tar.gz

==> Pouring kubernetes-cli--1.31.3.sonoma.bottle.tar.gz

==> Caveats

zsh completions have been installed to:

/usr/local/share/zsh/site-functions

==> Summary

🍺 /usr/local/Cellar/kubernetes-cli/1.31.3: 237 files, 61.3MB

==> Running `brew cleanup kubernetes-cli`...

Disable this behaviour by setting HOMEBREW_NO_INSTALL_CLEANUP.

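Hide these hints with HOMEBREW_NO_ENV_HINTS (see `man brew`).

Next, create the ~/.kube folder and save a copy of k3s.yaml as config. Note that scp resolves a remote path without a leading slash, such as root@gvenzl-rbp-0:k3s.yaml, relative to the remote user’s home directory, so the command below expects a copy of k3s.yaml in root’s home directory on the control plane; otherwise, use the full path /etc/rancher/k3s/k3s.yaml: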

gvenzl@gvenzl-mac ~ % mkdir ~/.kube

gvenzl@gvenzl-mac ~ % scp root@gvenzl-rbp-0:k3s.yaml ~/.kube/config

root@gvenzl-rbp-0's password:

k3s.yaml 100% 2965 221.2KB/s 00:00

And replace the server parameter with the IP address or hostname of your control plane node:

gvenzl@gvenzl-mac ~ % sed -i '' 's|server: .*|server: https://gvenzl-rbp-0:6443|g' ~/.kube/config

Once you have done that, you can control your cluster locally too:

gvenzl@gvenzl-mac ~ % kubectl get nodes

NAME STATUS ROLES AGE VERSION

gvenzl-rbp-0 Ready control-plane,master 39m v1.30.6+k3s1

gvenzl-rbp-1 Ready <none> 28m v1.30.6+k3s1

gvenzl-rbp-2 Ready <none> 25m v1.30.6+k3s1

gvenzl-rbp-3 Ready <none> 25m v1.30.6+k3s1

gvenzl-rbp-4 Ready <none> 24m v1.30.6+k3s1

gvenzl-rbp-5 Ready <none> 23m v1.30.6+k3s1