“Oh, yes,” a head of platform said sheepishly, “we used to have integration tests, but eventually no one wanted to maintain them, and they mostly started to fail. So we had to turn them off.”

What started with the best intentions — to catch breaking changes early — became a maintenance burden as services evolved. Schemas kept changing, tests kept breaking and fixing them felt like a losing battle. This is a familiar story for teams running microservices: Integration tests, built with high hopes, often become unreliable, leading teams to either overcommit to maintenance or abandon them altogether.

Enter Shadow Testing

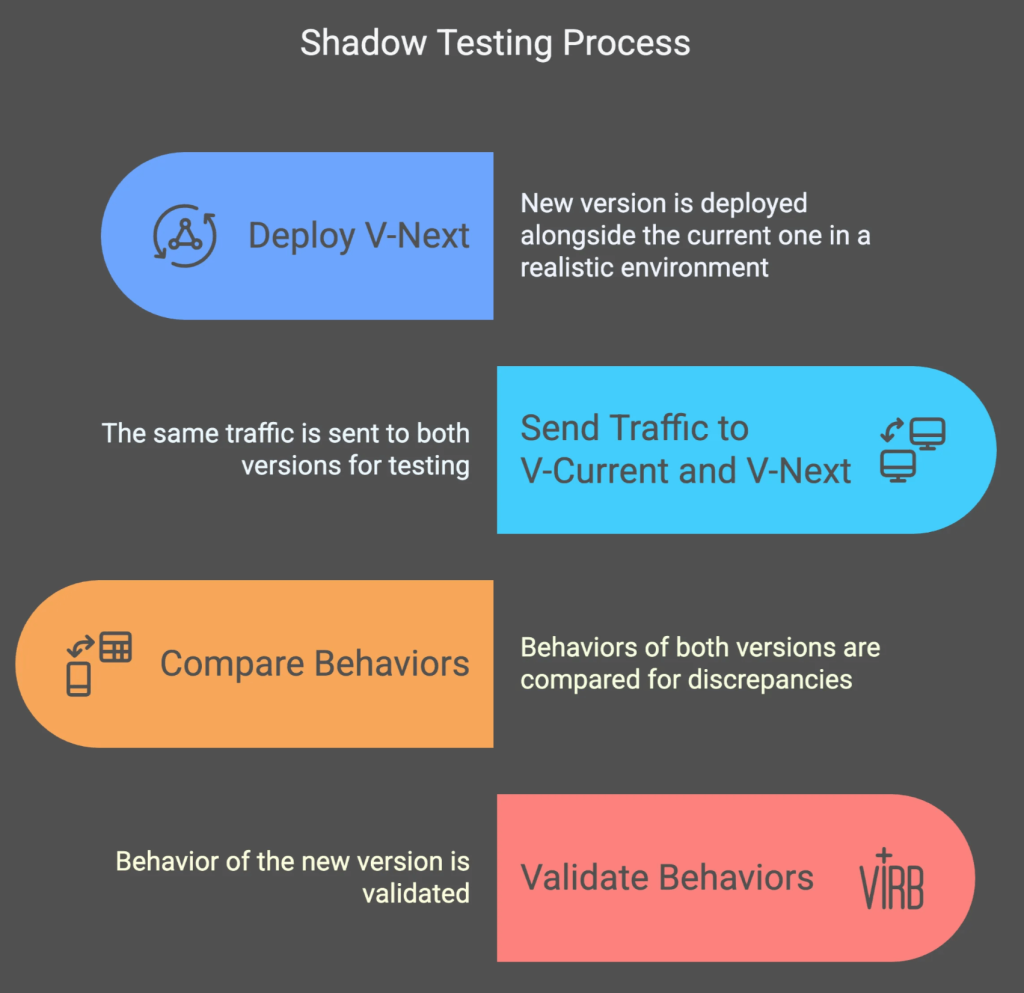

Shadow testing offers a fundamentally different approach to ensuring safe deployments. Instead of relying solely on mocks, stubs or brittle integration tests, shadow testing runs new service versions alongside the current one, processing the same traffic for direct comparison. This allows organizations to validate real-world behavior without affecting users. Unlike traditional preproduction tests, shadow testing:

- Runs test versions alongside the current version.

- Sends the same traffic to both the test version and the stable version for direct comparison.

- Requires minimal manual maintenance compared to traditional test setups.

The traffic does not have to be production traffic. It can also consist of synthetically crafted requests that closely mimic production traffic. The closer this representative traffic is to real-world conditions, the more valuable the test results.
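The core idea can be sketched in a few lines of Python. This is only an illustration under simplifying assumptions: the two versions are modeled as plain functions (`v_current` and `v_next` are hypothetical names), the call is synchronous, and no network is involved. In a real deployment the copy of the request is often made by a proxy or service mesh rather than by application code.

```python
from typing import Any, Callable, Dict, List, Tuple

Request = Dict[str, Any]
Response = Dict[str, Any]
Handler = Callable[[Request], Response]


def shadow_call(
    request: Request,
    stable: Handler,
    candidate: Handler,
    log: List[Tuple[Request, Response, Any]],
) -> Response:
    """Serve from the stable handler; run the candidate only for comparison."""
    stable_response = stable(request)
    try:
        # Give the candidate its own copy so it cannot mutate the original.
        candidate_result: Any = candidate(dict(request))
    except Exception as exc:
        # A candidate failure is recorded, never surfaced to the caller.
        candidate_result = exc
    log.append((request, stable_response, candidate_result))
    return stable_response


def v_current(req: Request) -> Response:
    # Hypothetical stable version.
    return {"status": 200, "total": req["qty"] * 10}


def v_next(req: Request) -> Response:
    # Hypothetical test version with a simulated regression on empty orders.
    if req["qty"] == 0:
        raise ValueError("empty order")
    return {"status": 200, "total": req["qty"] * 10}


observations: List[Tuple[Request, Response, Any]] = []
results = [shadow_call({"qty": q}, v_current, v_next, observations) for q in (2, 0)]
```

Callers only ever see the stable version's responses in `results`, while `observations` holds both outcomes for later comparison, including the exception raised by the test version.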

As noted in Microsoft’s Engineering Fundamentals Playbook, “Shadow testing reduces risks when you consider replacing the current environment (V-Current) with candidate environment with new feature (V-Next). This approach is monitoring and capturing differences between two environments then compares and reduces all risks before you introduce a new feature/release.” This ability to safely test under realistic conditions makes shadow testing an invaluable strategy for modern software teams.

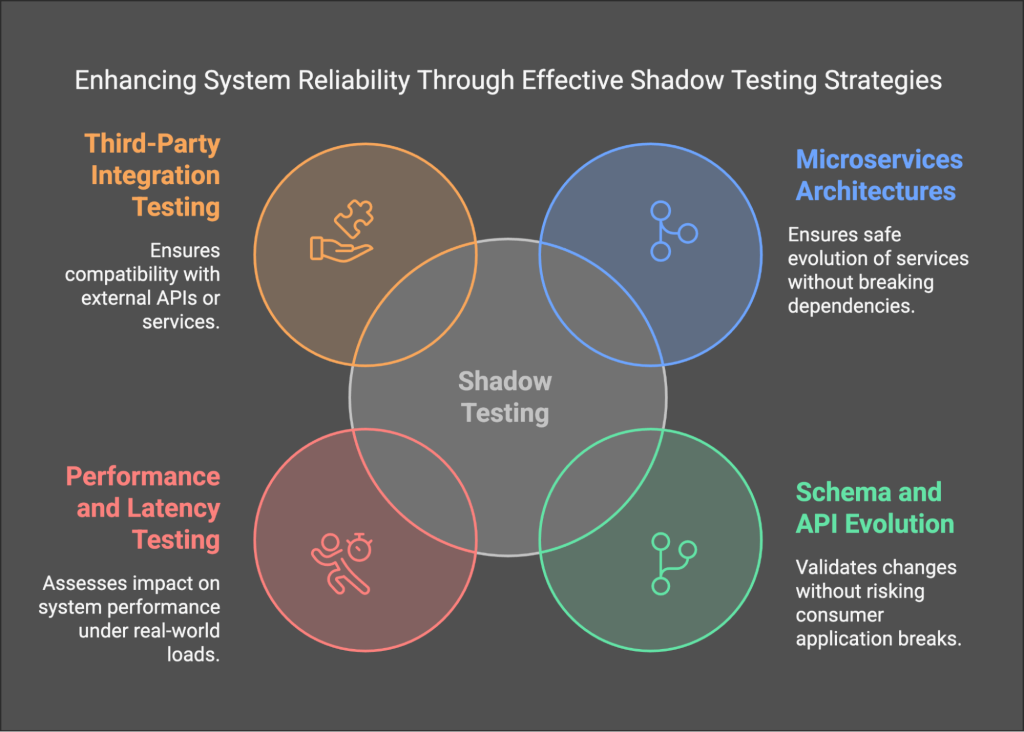

Where Shadow Testing Shines

Shadow testing is especially useful for microservices with frequent deployments, helping services evolve without breaking dependencies. It validates schema and API changes early, reducing risk before consumers are affected. It also assesses performance under real conditions and helps verify compatibility with third-party services.

Let us now compare it to other forms of testing that are familiar to most engineering teams:

| Testing Approach | Strengths | Weaknesses |
| --- | --- | --- |
| Unit tests | Fast, reliable, focused on small units of functionality | Limited scope, doesn’t cover integrations |
| Integration tests (mocks) | Simulates dependencies, isolates failures | Brittle, often outdated mocks |
| End-to-end tests | Covers critical flows from a business perspective | Expensive, flaky, hard to maintain |
| Shadow testing | Uses real dependencies, minimal maintenance, detects regressions | Requires infrastructure setup, potential security hurdles if using production traffic |

Now, you might ask: “Isn’t this just like canarying or feature flagging?” Not quite. While all three manage risk in deployments, they serve different roles:

- Canary releases: Gradually roll out changes to a small subset of users to monitor for issues, but failures still affect real users.

- Feature flags: Enable or disable functionality dynamically. They are ideal for controlled rollouts but not for testing changes early, because they typically come into play late in the software development life cycle, and they can be too cumbersome for small features.

- Shadow testing: Runs a new version in parallel with the stable version on the same traffic, validating backend changes without exposing users to the new version’s responses. It’s a shift-left approach that catches regressions and performance issues early, reducing late-stage surprises.

Shadow testing doesn’t replace traditional testing but rather complements it by reducing reliance on fragile integration tests. While unit tests remain essential for validating logic and end-to-end tests catch high-level failures, shadow testing fills the gap of real-world validation without disrupting users.

How Shadow Testing Works (Technical Overview)

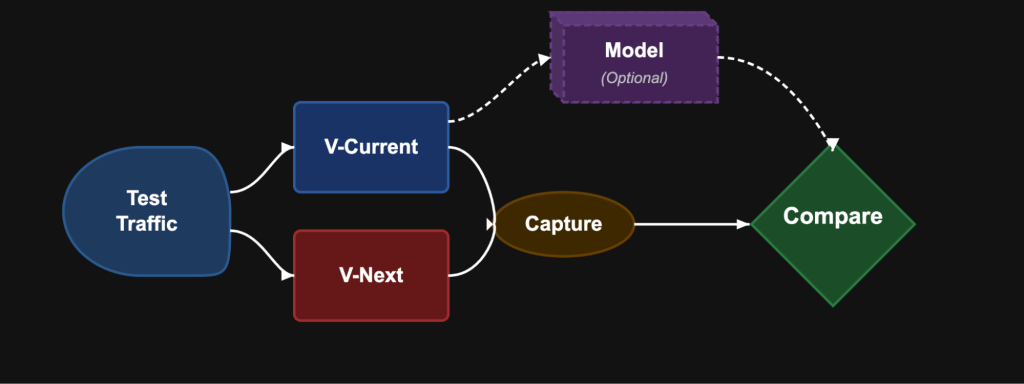

Shadow testing follows a common pattern regardless of environment and has been implemented by tools like Diffy from Twitter/X, which introduced automated-response comparisons to detect discrepancies effectively.

Some of the key aspects of such tools are:

- Traffic execution — The test version (V-Next) runs alongside the current version, processing the same traffic as the stable version to identify discrepancies. This ensures a direct comparison of behaviors, helping detect regressions and inconsistencies more effectively.

- Response comparison — Outputs are logged and compared to detect regressions or unexpected behavior. An optional model component keeps track of general patterns and helps interpret differences by classifying what is important and what is not, improving the relevance and accuracy of comparisons.

- Observability and metrics — Performance and error patterns are analyzed to ensure stability before full deployment.
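To make the response comparison step concrete, here is a minimal sketch, not the implementation of any particular tool. It borrows one idea associated with Diffy: send the same request to two instances of the stable version, treat whatever differs between them (timestamps, request IDs) as noise, and report only the remaining differences in the test version. The function names and sample payloads are hypothetical.

```python
from typing import Any, Set


def diff_paths(a: Any, b: Any, prefix: str = "") -> Set[str]:
    """Return dotted paths at which two JSON-like values differ."""
    if isinstance(a, dict) and isinstance(b, dict):
        paths: Set[str] = set()
        for key in a.keys() | b.keys():
            child = f"{prefix}.{key}" if prefix else str(key)
            if key not in a or key not in b:
                paths.add(child)  # field present on one side only
            else:
                paths |= diff_paths(a[key], b[key], child)
        return paths
    # Scalars and lists are compared as whole values in this sketch.
    return set() if a == b else {prefix or "<root>"}


def regressions(stable_a: Any, stable_b: Any, candidate: Any) -> Set[str]:
    """Differences in the candidate that are not explained by noise."""
    noise = diff_paths(stable_a, stable_b)
    return diff_paths(stable_a, candidate) - noise


# Hypothetical responses to the same request.
stable_a = {"id": 7, "price": {"amount": 100, "currency": "USD"}, "ts": "10:00:01"}
stable_b = {"id": 7, "price": {"amount": 100, "currency": "USD"}, "ts": "10:00:02"}
candidate = {"id": 7, "price": {"amount": 100, "currency": "usd"}, "ts": "10:00:03"}

suspicious = regressions(stable_a, stable_b, candidate)
```

Here the `ts` field differs between the two stable responses, so it is filtered out as noise, and only `price.currency` is left in `suspicious`.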

The environment where shadow testing is performed can vary, and each option offers different benefits. In general, the more realistic the environment, the more trustworthy the results:

- Staging shadow testing — Easier to set up, avoids compliance and data isolation issues, and can use synthetic or anonymized production traffic to validate changes safely.

- Production shadow testing — Provides the most accurate validation using live traffic but requires safeguards for data handling, compliance and test workload isolation.
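As one illustration of a data-handling safeguard, the sketch below replaces sensitive fields in captured requests with salted hashes before they are replayed in staging. The field names in `SENSITIVE_FIELDS` and the sample record are assumptions made for the example. Salted hashing is pseudonymization rather than full anonymization, so whether it satisfies a given compliance requirement has to be checked separately.

```python
import hashlib
from typing import Any, Dict, FrozenSet

# Hypothetical set of field names considered sensitive.
SENSITIVE_FIELDS: FrozenSet[str] = frozenset({"email", "name", "card_number"})


def pseudonymize(value: str, salt: str) -> str:
    """Map a value to a stable, non-reversible token."""
    digest = hashlib.sha256((salt + value).encode("utf-8")).hexdigest()
    return digest[:12]


def scrub(
    record: Dict[str, Any],
    salt: str,
    sensitive: FrozenSet[str] = SENSITIVE_FIELDS,
) -> Dict[str, Any]:
    """Return a copy of the record with sensitive fields replaced by tokens."""
    cleaned: Dict[str, Any] = {}
    for key, value in record.items():
        if isinstance(value, dict):
            cleaned[key] = scrub(value, salt, sensitive)  # recurse into nested objects
        elif key in sensitive:
            cleaned[key] = pseudonymize(str(value), salt)
        else:
            cleaned[key] = value
    return cleaned


# Hypothetical captured request body.
captured = {
    "order_id": 42,
    "email": "ada@example.com",
    "shipping": {"name": "Ada", "city": "Paris"},
}
replayable = scrub(captured, salt="per-environment-secret")
```

Because the same input and salt always produce the same token, requests from the same user still correlate after scrubbing, which keeps the replayed traffic representative.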

This structured approach ensures that a service change is validated under real conditions while minimizing deployment risks.
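The observability step could feed a simple promotion gate like the one sketched here. The thresholds (`max_mismatch_rate`, `max_p95_ratio`) and the `Sample` structure are hypothetical; real limits depend on the service and on how noisy its responses are.

```python
from dataclasses import dataclass
from statistics import quantiles
from typing import Dict, List, Union


@dataclass
class Sample:
    matched: bool        # did the responses agree after noise filtering?
    stable_ms: float     # latency of the stable version
    candidate_ms: float  # latency of the test version


def p95(values: List[float]) -> float:
    """95th percentile; needs at least two data points."""
    return quantiles(values, n=20, method="inclusive")[-1]


def summarize(
    samples: List[Sample],
    max_mismatch_rate: float = 0.01,
    max_p95_ratio: float = 1.2,
) -> Dict[str, Union[float, bool]]:
    """Summarize a shadow run and decide whether it passes the gate."""
    mismatch_rate = sum(not s.matched for s in samples) / len(samples)
    p95_ratio = p95([s.candidate_ms for s in samples]) / p95(
        [s.stable_ms for s in samples]
    )
    return {
        "mismatch_rate": mismatch_rate,
        "p95_ratio": p95_ratio,
        "passed": mismatch_rate <= max_mismatch_rate and p95_ratio <= max_p95_ratio,
    }
```

A CI job could call `summarize` on the samples collected during a shadow run and block the rollout when `passed` is false.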

Conclusion

Shadow testing isn’t just another testing strategy. It’s a shift in the way modern organizations approach software validation. By running real or representative traffic against new versions in safe, sandboxed environments, teams can ease the trade-off between speed and reliability. For organizations tired of the brittle nature of traditional integration tests, shadow testing offers a practical, scalable alternative that reduces risk while accelerating development.

If you are running microservices in Kubernetes, Signadot enables teams to run shadow tests easily, whether using Linkerd, Istio or even without a service mesh. With lightweight environments that integrate seamlessly into CI/CD pipelines, organizations can:

- Spin up isolated test sandboxes for every branch and send traffic to test microservices via Smart Tests.

- Compare requests and responses between the stable and test versions in real time.

Implementing shadow testing at scale requires infrastructure capable of isolating test environments, keeping test traffic separate from user traffic and providing observability. How do you use shadow testing in your organization? We’d love to hear about your experiences and best practices.

The post Microservice Integration Testing a Pain? Try Shadow Testing appeared first on The New Stack.