“It’s one thing to solve performance challenges when you have plenty of time, money and expertise available. But what do you do in the opposite situation: if you are a small startup with no time or money and still need to deliver outstanding performance?” – Gwen Shapira, co-founder of Nile (PostgreSQL reengineered for multitenant apps)

That’s the multimillion-dollar question for many early-stage startups. And who better to lead that discussion than Gwen Shapira, who has tackled performance from two vastly different perspectives? After years of focusing on data systems performance at large organizations, she recently pivoted to leading a startup, where she found herself responsible for full-stack performance from the ground up.

In her P99 CONF keynote, “High Performance on a Low Budget,” Shapira explored the topic by sharing performance challenges she and her small team faced at Nile: how they approached them, trade-offs and lessons learned. A few takeaways that set the conference chat on fire:

- Benchmarks should pay rent.

- Keep tests stupid simple.

- If you don’t have time to optimize, at least don’t “pessimize.”

But the real value is in hearing the experiences behind these and other zingers.

You can watch her talk below, or keep reading for a guided tour.


Do Worry About Performance Before You Ship It

Per Shapira, founders get all sorts of advice on how to run the company (whether they want it or not). Regarding performance, it’s common to hear tips like:

- Don’t worry about performance until users complain.

- Don’t worry about performance until you have product-market fit.

- Performance is a good problem to have.

- If people complain about performance, that’s a great sign!

But she respectfully disagrees. After years of focusing on performance, she’s all too familiar with the aftermath of that approach.

Shapira shared, “As you talk to people, you want to figure out the minimal feature set required to bring an impactful product to market. And performance is part of this package. You should discover the target market’s performance expectations when you discover the other expectations.”

Things to consider at an early stage:

- If you’re trying to beat the competition on performance, how much faster do you need to be?

- Even if performance is not your key differentiator, what are your users’ expectations regarding performance?

- To what extent are users willing to accept latency or throughput trade-offs for different capabilities — or accept higher costs to avoid those trade-offs?

Founders are often told that even if they’re not fully satisfied with the product, just ship it and see how people react. Shapira’s reaction: “If you do a startup, there is a 100% chance that you will ship something that you’re not 100% happy with. But the reason you ship early and iterate is because you really want to learn fast. If you identified performance expectations during the discovery phase, try to ship something in that ballpark. Otherwise, you’re probably not learning all that much — with respect to performance, at least.”

Hyperfocus on the User’s Perceived Latency

Startups looking to make the biggest performance impact with limited resources can “cheat” by focusing on what will really help attract and retain customers: optimizing the user’s perceived latency.

Web apps are a great place to begin. Even if your core product is an eBPF-based edge database, your users will likely be interacting with a web app from the start of the user journey. Plus, there are lots of nice metrics to track (for example, Google’s Core Web Vitals).

Shapira noted, “Startups very rarely have a scale problem. If you do have a scale problem, you can, for example, put people on a wait list while you’re determining how to scale out and add machines. However, even if you have a small number of users, you definitely care about them having a great experience with low latency. And perceived low latency is what really matters.”

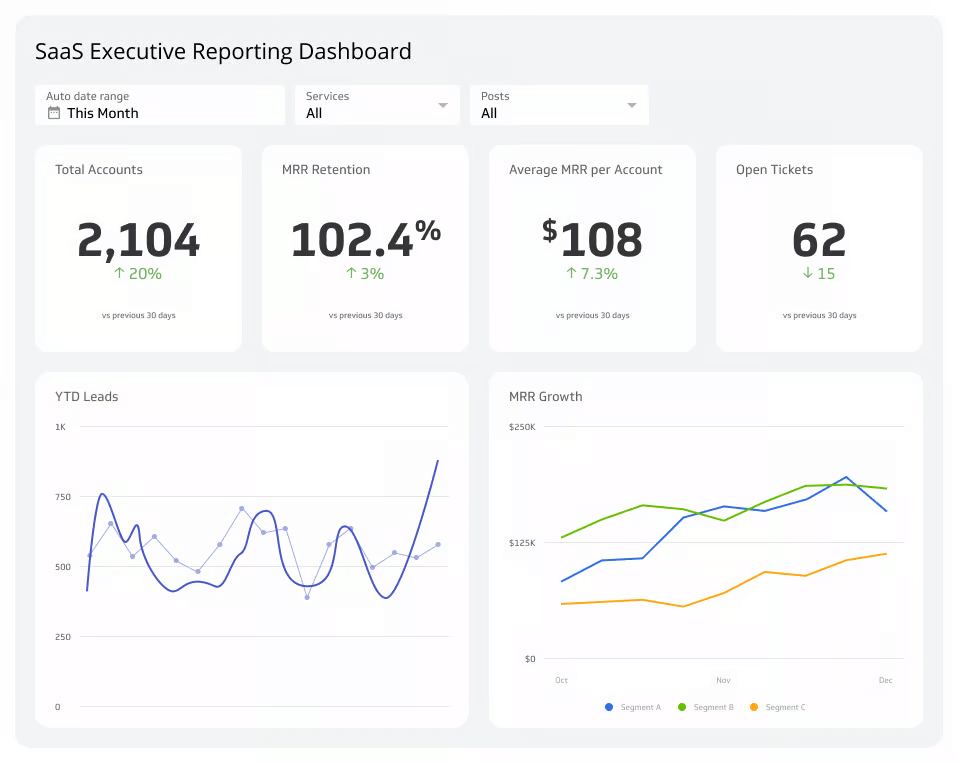

For example, consider this dashboard:

When users logged in, the Nile team wanted to impress them by having this cool dashboard load instantly. However, they found that response times ranged from a snappy 200 milliseconds to a terrible 10+ seconds.

To tackle the problem, the team started by parallelizing requests, filling in dashboard elements as data arrived and creating a progressive loading experience. These optimizations helped, and progressive loading turned out to be a fantastic way to hide latency (keeping the user engaged, like mirrors distracting you in a slow elevator).
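
The pattern can be sketched in a few lines of Python. This is a minimal illustration, not Nile's frontend code: the widget names and latencies are hypothetical, and `asyncio.sleep` stands in for real API calls. All requests start together, and each widget is rendered as soon as its data arrives instead of waiting for the slowest one.

```python
import asyncio

# Hypothetical dashboard widgets with simulated backend latencies (seconds).
WIDGET_LATENCY = {"open_tickets": 0.05, "usage_chart": 0.01, "recent_activity": 0.03}


async def fetch_widget(name: str) -> tuple[str, str]:
    """Stand-in for one API call; sleeps instead of doing network I/O."""
    await asyncio.sleep(WIDGET_LATENCY[name])
    return name, f"data for {name}"


async def load_dashboard(render) -> None:
    """Start every request at once and render each widget when it arrives."""
    tasks = [asyncio.create_task(fetch_widget(name)) for name in WIDGET_LATENCY]
    for finished in asyncio.as_completed(tasks):
        name, data = await finished
        render(name, data)  # progressive loading: fill in this element now


def run_demo() -> list[str]:
    """Return the order in which widgets were rendered."""
    order: list[str] = []
    asyncio.run(load_dashboard(lambda name, data: order.append(name)))
    return order
```

With these simulated latencies, `run_demo()` returns the widgets fastest-first, and the total wait is roughly that of the slowest request rather than the sum of all three.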

However, the optimizations exposed another issue: The app was making 2,000 individual API calls just to count open tickets. This is the classic N+1 problem (when you end up running a query for each result instead of running a single optimized query that retrieves all necessary data at once).
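
To make the N+1 shape concrete, here is a small sketch using Python's built-in `sqlite3` module. The `projects` and `tickets` tables are hypothetical, and the example uses SQL queries where the article describes API calls, but the pattern is the same: one request per item versus one request that returns everything.

```python
import sqlite3

# Hypothetical schema and data, held in memory for illustration only.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE projects (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE tickets (id INTEGER PRIMARY KEY, project_id INTEGER, status TEXT);
    INSERT INTO projects (id, name) VALUES (1, 'api'), (2, 'web'), (3, 'docs');
    INSERT INTO tickets (project_id, status) VALUES
        (1, 'open'), (1, 'open'), (1, 'closed'), (2, 'open');
""")


def open_counts_n_plus_one(conn):
    """N+1: one query for the project list, then one more query per project."""
    project_ids = [row[0] for row in conn.execute("SELECT id FROM projects")]
    queries = 1
    counts = {}
    for project_id in project_ids:
        (count,) = conn.execute(
            "SELECT COUNT(*) FROM tickets WHERE project_id = ? AND status = 'open'",
            (project_id,),
        ).fetchone()
        counts[project_id] = count
        queries += 1
    return counts, queries


def open_counts_single_query(conn):
    """One aggregated query that returns the open-ticket count for every project."""
    rows = conn.execute("""
        SELECT p.id, COUNT(t.id)
        FROM projects AS p
        LEFT JOIN tickets AS t ON t.project_id = p.id AND t.status = 'open'
        GROUP BY p.id
    """).fetchall()
    return dict(rows), 1
```

Both functions return the same counts, but the first issues one query per project plus one for the list, so its cost grows with the number of projects.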

Naturally, that inspired some API refinement and tuning. Then, another discovery: Their frontend dev noticed they were fetching more data than needed, so he cached it in the browser. This update sped up dashboard interactions by serving pre-cached data from the browser’s local storage.

However, despite all those optimizations, the dashboard remained data-heavy. “Our customers loved it, but there was no reason why it had to be the first page after logging in,” Shapira remarked. So they moved the dashboard a layer down in the navigation. In its place, they added a much simpler landing page with easy access to the most common user tasks.

Benchmarks Should Pay Rent

Next topic: the importance of being strategic about benchmarking. “Performance people love benchmarking (and I’m guilty of that),” Shapira admitted. “But you can spend infinite time benchmarking with very little to show for it. So I want to share some tips on how to spend less time and have more to show for it.”

She continued, “Benchmarks should pay rent by answering some important questions that you have. If you don’t have an important question, don’t run a benchmark. There, I just saved you weeks of your life — something invaluable for startups. You can thank me later.”

If your primary competitive advantage is performance, you will be expected to share performance tests to (attempt to) prove how fast and cool you are. Call it “benchmarketing.” For everyone else, two common questions to answer with benchmarking are:

- Is our database setup optimal?

- Are we delivering a good user experience?

To assess the database setup, teams tend to:

- Turn to a standard benchmark like TPC-C

- Increase load over time

- Look for bottlenecks

- Fix what they can

But is this really the best way for a startup to spend its limited time and resources?

Given her background as a performance expert, Shapira couldn’t resist doing such a benchmark early on at Nile. But she doesn’t recommend it — at least not for startups: “First of all, it takes a lot of time to run the standard benchmarks when you’re not used to doing it week in, week out. It takes time to adjust all the knobs and parameters. It takes time to analyze the results, rinse and repeat. Even with good tools, it’s never easy.”

They did identify and fix some low-hanging fruit from this exercise. But since the tests were based on a standard benchmark, it was unclear how well they mapped to actual user experiences. Shapira continued, “I didn’t feel the ROI [return on investment] was exactly compelling. If you’re a performance expert and it takes you only about a day, it’s probably worth it. But if you have to get up to speed, if you’re spending significant time on the setup, you’re better off focusing your efforts elsewhere.”

A better question to obsess over is: “Are we delivering a good experience?” More specifically, focus on these three areas:

- Optimizing user onboarding paths

- Addressing performance issues that annoy developers (these likely annoy users too)

- Paying attention to metrics that customers obsess over, even if they’re not the ones your team has focused on

Keep Benchmarking Tests Stupid Simple

Another testing lesson learned: Focus on extra stupid sanity tests. At Nile, the team ran the simplest possible queries, like loading an empty page or querying an empty table. If those were slow, there was no point in running more complex tests. Stop, fix the problem, then proceed with more interesting tests.
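
A sanity test of this kind can be very small. The sketch below is an assumption-laden illustration, not Nile's test suite: it uses an in-memory SQLite table as a stand-in for the real database, and the 50 ms budget and helper names are hypothetical. It times the simplest possible query and refuses to continue if even that is slow.

```python
import sqlite3
import statistics
import time


def median_ms(fn, repeats: int = 20) -> float:
    """Median wall-clock time of fn() in milliseconds over several runs."""
    samples = []
    for _ in range(repeats):
        start = time.perf_counter()
        fn()
        samples.append((time.perf_counter() - start) * 1000.0)
    return statistics.median(samples)


def passes_sanity_check(fn, budget_ms: float, repeats: int = 20) -> bool:
    """True if the median latency of fn() stays within the budget."""
    return median_ms(fn, repeats) <= budget_ms


# Stand-in for the real system: an empty table in an in-memory database.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE tickets (id INTEGER PRIMARY KEY, status TEXT)")


def query_empty_table() -> list:
    return conn.execute("SELECT id, status FROM tickets").fetchall()


if __name__ == "__main__":
    # First confirm the query returns what we think it does, then time it.
    assert query_empty_table() == []
    if not passes_sanity_check(query_empty_table, budget_ms=50.0):
        raise SystemExit("Empty-table query is slow: fix this before running bigger tests")
```

Checking the returned value before timing it guards against measuring something other than the intended operation.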

Also, obsess over understanding what the numbers actually measure. You don’t want to base critical performance decisions on misleading results (such as empty responses) or unintended behaviors. For example, her team once intended to test the write path but ended up testing the read path thanks to a misconfigured DNS.

Build Infrastructure for Long-Term Value, Optimize for Quick Wins

The instrumentation and observability tools put in place during testing will pay off for years to come. At Nile, this infrastructure became invaluable throughout the product’s lifetime for answering the persistent question, “Why is it slow?” As Shapira put it: “Those early performance test numbers, that instrumentation, all the observability — this is very much a gift that keeps on giving as you continue to build and users inevitably complain about performance.”
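
As a rough idea of what lightweight instrumentation can look like, here is a minimal Python sketch of a per-stage timer. The `StageTimer` class, the stage names and the `handle_request` function are all hypothetical; a production setup would more likely rely on an existing tracing or metrics library.

```python
import json
import time
from collections import defaultdict
from contextlib import contextmanager


class StageTimer:
    """Accumulates wall-clock time per named stage of a request."""

    def __init__(self) -> None:
        self.seconds = defaultdict(float)

    @contextmanager
    def stage(self, name: str):
        start = time.perf_counter()
        try:
            yield
        finally:
            # Record the time even if the stage raises an exception.
            self.seconds[name] += time.perf_counter() - start

    def shares(self) -> dict:
        """Fraction of total measured time spent in each stage."""
        total = sum(self.seconds.values())
        if total == 0:
            return {}
        return {name: spent / total for name, spent in self.seconds.items()}


def handle_request(timer: StageTimer, payload: str) -> int:
    """Hypothetical request handler split into three timed stages."""
    with timer.stage("parsing"):
        doc = json.loads(payload)
    with timer.stage("lookups"):
        time.sleep(0.002)  # stand-in for a slow lookup
        tenant = doc["tenant"]
    with timer.stage("work"):
        result = len(tenant)
    return result
```

Calling `timer.shares()` after a batch of requests gives a breakdown of where the time went, which is the kind of data needed to answer “Why is it slow?”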

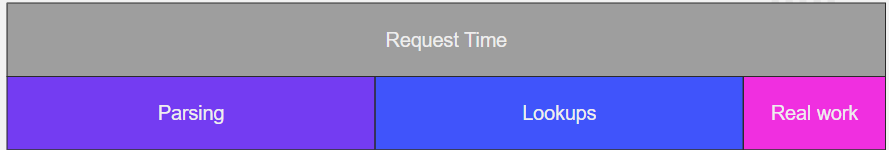

When prioritizing performance improvements, look for quick wins. For example, Nile found that a slow request was spending 40% of the time on parsing, 40% on lookups and just 20% on actual work.

The developer realized he could reuse an existing caching library to speed up lookups. That was a nice quick win, giving 40% of the time back with minimal effort. However, if he’d said, “I’m not 100% sure about caching, but I have this fast JSON parsing library,” then that would have been a better way to shave off an equivalent 40%.
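
The arithmetic behind that trade-off is easy to check. The sketch below uses the 40/40/20 split from the talk; the speedup factors are hypothetical. It also shows that “40% back” is the best case, reached only when the optimized stage becomes nearly free.

```python
# Time split reported for the slow request: 40% parsing, 40% lookups, 20% work.
SHARES = {"parsing": 0.4, "lookups": 0.4, "work": 0.2}


def remaining_time(shares: dict, speedups: dict) -> float:
    """Fraction of the original request time left after speeding up some stages.

    `speedups` maps a stage name to a hypothetical speedup factor
    (10.0 means that stage runs ten times faster); other stages are unchanged.
    """
    return sum(share / speedups.get(stage, 1.0) for stage, share in shares.items())


# A 10x faster lookup stage leaves about 64% of the original time.
after_caching = remaining_time(SHARES, {"lookups": 10.0})

# A 10x faster parser gives the same result, because both stages are 40%.
after_fast_parser = remaining_time(SHARES, {"parsing": 10.0})

# Even an infinitely fast lookup stage cannot go below 60% of the original time.
best_case = remaining_time(SHARES, {"lookups": float("inf")})
```

Because parsing and lookups take equal shares, the cheaper change to implement is the better first move, which is why reusing an existing caching library counted as a quick win.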

About a year later, they pushed most of the parsing down to a Postgres extension that was written in C and nicely optimized. The optimizations never end!

No Time To Optimize? Then At Least Don’t “Pessimize”

Shapira’s final tip involved empowering experienced engineers to make common-sense improvements.

“Last but not least, sometimes you really don’t have time to optimize. But if you have a team of experienced engineers, they know not to pessimize. They are familiar with faster JSON libraries, async libraries that work behind the scenes, they know not to put slow stuff on the critical path and so on. Even if you lack the time to prove that these things are actually faster, just do them. It’s not premature optimization. It’s just avoiding premature pessimization.”

The post High Performance on a Low Budget appeared first on The New Stack.
