Intel executives are rolling out additions to the company’s Xeon 6 processor family that target everything from the data center to telecommunications environments. Among the offerings is the latest Xeon 6 system-on-a-chip (SoC) for edge and network solutions, with a focus on AI workloads, performance, and security.

The SoC offers “optimal AI inference performance without any additional discrete accelerator for reducing operator complexity, eliminating unnecessary hardware, reducing energy consumption, and perfecting a CPU lineup built for networks and the edge in the era of AI automation,” Cristina Rodriguez, vice president and general manager of Intel’s Communications Group, told journalists.


The chip, formerly codenamed “Granite Rapids-D,” integrates acceleration, connectivity, and security technologies, allowing enterprises to consolidate more workloads onto fewer systems, a key capability given the tight space and power constraints at the edge.

“This combination of optimized architectural capacity gain means operators can dramatically reduce their server footprint,” Rodriguez said. “Many site configurations that currently require two or more servers can be consolidated to just a single server for reduced capital, OpEx and energy costs.”


The new SoC is designed to give software developers more compute capabilities at the edge, and to do so with an architecture they already know. The edge is an increasingly important environment that can be viewed as the third leg of the IT stool, along with data centers and the cloud, or simply as an extension of what enterprises have on premises.

“It’s really about compatibility with their programming environments, whether it be on-prem, edge or cloud,” Jack Gold, principal analyst with J.Gold Associates, told The New Stack. “I don’t want to have to learn a new paradigm, nor do I want to become an expert on where my app should be hosted, so edge systems need to be compatible with either traditional on-prem systems — like x86 environments — or as extensions of cloud infrastructure.”

Gold noted that major cloud providers like Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform have localized edge cloud systems.

“New chips like Xeon 6 add a significant enhanced environment to run solutions on-prem, at the edge in Xeon servers, or even in the cloud, where Xeon instances are readily available,” he said. “For an app developer, being able to write one piece of code that runs on all three scenarios is attractive.”

The Edge on the Rise

The edge in the past several years has become a key IT focus as companies push everything from infrastructure to software development closer to the connected systems where the data is being generated, whether that’s a machine on a manufacturing floor, an Internet of Things (IoT) device, or a remote sensor on a gas pipeline.

The rise of AI has only accelerated the shift. Intel executives pointed to IDC market numbers for AI workloads — including generative AI and machine learning — with global spending hitting $194 billion in 2024 and expected to rise to $514 billion in 2027.

The emerging technology dovetails with the ongoing demand for real-time data processing, low latency, reduced costs (it can be pricey sending mountains of data from the edge to cloud data centers), and greater efficiency, all of which are driving the shift to the edge. The edge market is expected to grow from $232 billion last year to almost $350 billion by 2027, according to IDC.

“In an age where speed and efficiency are paramount, edge computing emerges as a game-changing force in software development,” executives with Maennche, a custom software development firm, wrote in a blog post. “The continued evolution of software development is closely tied to the advancements in edge computing. This technology not only enhances application performance but also equips businesses with the tools to handle the growing demands of data processing and analysis.”

Chip Makers Branch Out

For semiconductor companies like Intel, the edge is a key expansion opportunity, Gold said. This is particularly true as AI moves from being primarily about building models, which requires massive amounts of compute power, to more inference-based deployments, where the models are used locally in the solution areas that need them.

“In those cases, dropping server products like Xeon, which have significant processing power in their CPU but also include GPU [and] NPU [neural processing unit] capabilities to accelerate AI solutions, will be very useful,” the analyst said.

The Xeon 6 family — including the edge-focused SoC — is an opportunity for Intel, which long has dominated the data center chip space but has stumbled in recent years and finds itself in tighter competition with the likes of AMD, Arm, and Nvidia, which with its GPUs has become the leader in AI compute components.

It’s About the Integration

The key for the Xeon 6 SoC is its built-in capabilities, which negate the need for discrete components and which Intel’s Rodriguez said help drive the necessary system and workload consolidation. The integrated components include vRAN Boost, which accelerates virtualized radio access network (vRAN) functions on the system. vRAN technology is key for enabling network features on general-purpose servers.

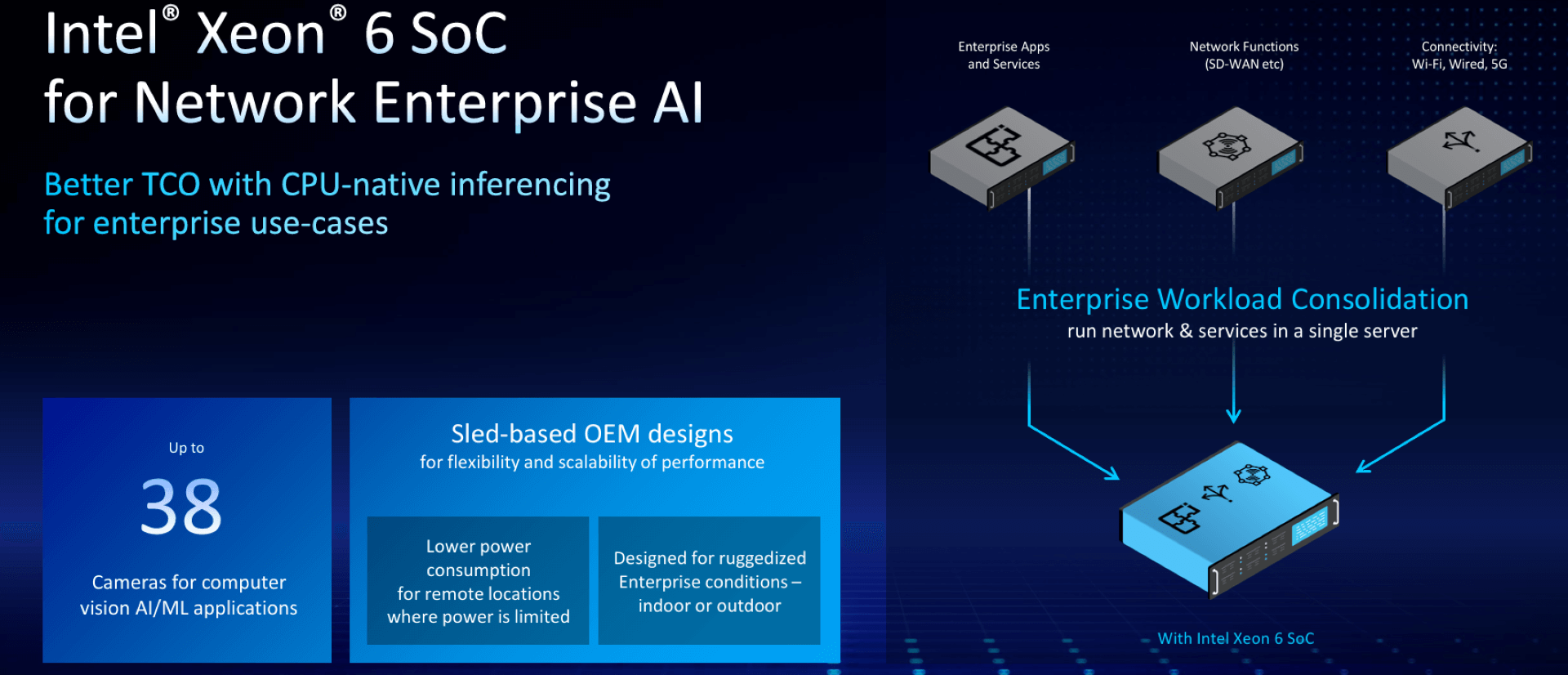

Intel Consolidation

There also are Intel’s AVX (Advanced Vector Extensions) for accelerating demanding workloads, including AI and deep learning jobs; AMX (Advanced Matrix Extensions), which enables AI workloads to run on the CPU rather than being offloaded to a discrete accelerator; and up to eight Intel Ethernet ports.
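For developers who want to confirm whether a given Linux server exposes these instruction sets before scheduling CPU-based inference on it, one rough approach is to read the CPU feature flags the kernel reports. The sketch below is an illustration rather than anything Intel described: it assumes an x86 Linux host where `/proc/cpuinfo` has a `flags` line, and the helper names `parse_cpu_flags` and `detect_features` are hypothetical. Which flags a particular Xeon 6 SoC model actually reports is not stated in Intel’s announcement.

```python
from pathlib import Path

# Human-readable label -> flag name as printed on the "flags" line of
# /proc/cpuinfo on x86 Linux. The selection of flags is illustrative.
FEATURES = {
    "AVX2": "avx2",
    "AVX-512 Foundation": "avx512f",
    "AMX tiles": "amx_tile",
    "AMX BF16": "amx_bf16",
    "AMX INT8": "amx_int8",
}


def parse_cpu_flags(cpuinfo_text: str) -> set[str]:
    """Return the flags from the first 'flags' line, or an empty set."""
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "flags":
            return set(value.split())
    return set()


def detect_features(cpuinfo_text: str) -> dict[str, bool]:
    """Map each label in FEATURES to whether its flag is present."""
    flags = parse_cpu_flags(cpuinfo_text)
    return {label: flag in flags for label, flag in FEATURES.items()}


if __name__ == "__main__":
    # On non-Linux hosts the file is absent, so every feature reports "no".
    cpuinfo = Path("/proc/cpuinfo")
    text = cpuinfo.read_text() if cpuinfo.exists() else ""
    for label, present in detect_features(text).items():
        print(f"{label}: {'yes' if present else 'no'}")
```

A check like this only says what the CPU advertises. Whether an AI framework actually uses AMX or AVX for a given model depends on the framework build and data types involved.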

The combination of the chip’s compact design, vRAN, and AI capabilities is driving up to a 3.2-times improvement in RAN AI performance-per-watt, up to 70% better performance-per-watt than its predecessor, and up to 2.4 times the workload capacity, according to Intel. There’s also the built-in Intel Media Transcode Accelerator, which is driving a 14-fold performance-per-watt jump over Intel’s 6538N.

Systems Are on the Way

The SoC can be used in a range of systems, from enterprise edge servers to network appliances to network security accelerators, according to Intel.

“We have packed more functionality to the SoC than ever before, reducing complexity, lowering heat, cutting costs, saving space, all while enhancing security for operators,” Rodriguez said. “We have simplified their supply chain, their solutions, and their deployment. We have secured the network investment and we’re setting them up for the future. This combination of optimized architectural capacity gain means operators can dramatically reduce their server footprint.”

Hewlett Packard Enterprise (HPE) earlier this month rolled out new ProLiant systems powered by the latest Xeon 6 offerings. The edge also was a focus for the IT giant.

“The edge is growing, so the problem is growing,” said Krista Satterthwaite, senior vice president and general manager of compute at HPE. “The data center is controlled, it’s cradled, it’s constantly watched over all the time, but the edge is a little bit more of the Wild West. … It’s harder at the edge.”

The post Edge Computing Gets Supercharged with Intel’s New SoC appeared first on The New Stack.
