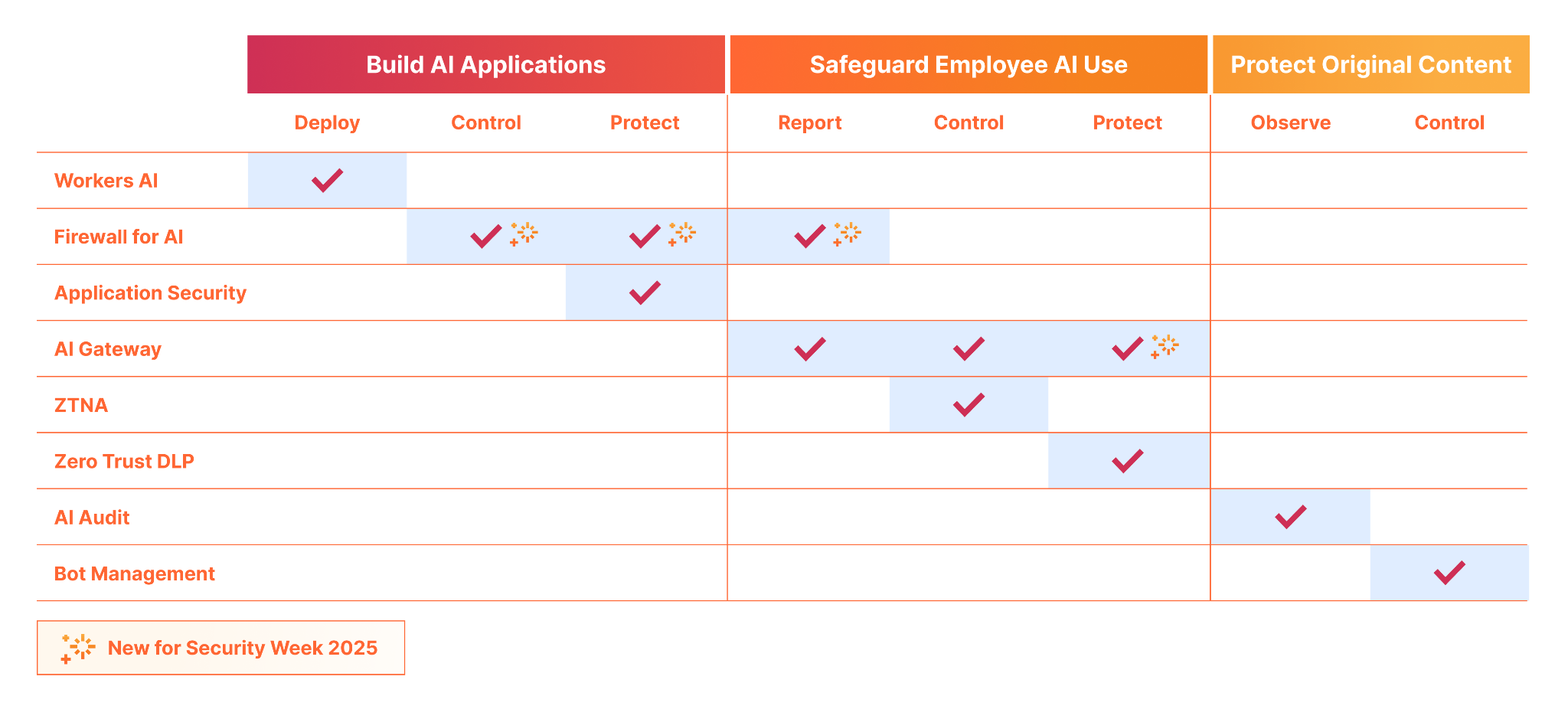

Cloudflare, the content delivery and security service, today announced the launch of Cloudflare for AI, a new suite of security tools that aims to address some of the most pressing security concerns that businesses have around the use of AI models and services.

The suite includes tools to monitor how employees use AI services, discover when they use unauthorized ones, and stop information from leaking to these models. The new service can also detect when inappropriate or toxic prompts are submitted to a model.

As Cloudflare explains in today’s announcement, the idea here is to help businesses feel more confident about the AI workloads they enable for their employees and users.

“Over the next decade, an organization’s AI strategy will determine its fate — innovators will thrive, and those who resist will disappear. The adage ‘move fast, break things’ has become the mantra as organizations race to implement new models and experiment with AI to drive innovation,” said Matthew Prince, co-founder and CEO at Cloudflare. “But there is often a missing link between experimentation and safety. Cloudflare for AI allows customers to move as fast as they want in whatever direction, with the necessary safeguards in place to enable rapid deployment and usage of AI. It is the only suite of tools today that solves customers’ most pressing concerns without putting the brakes on innovation.”

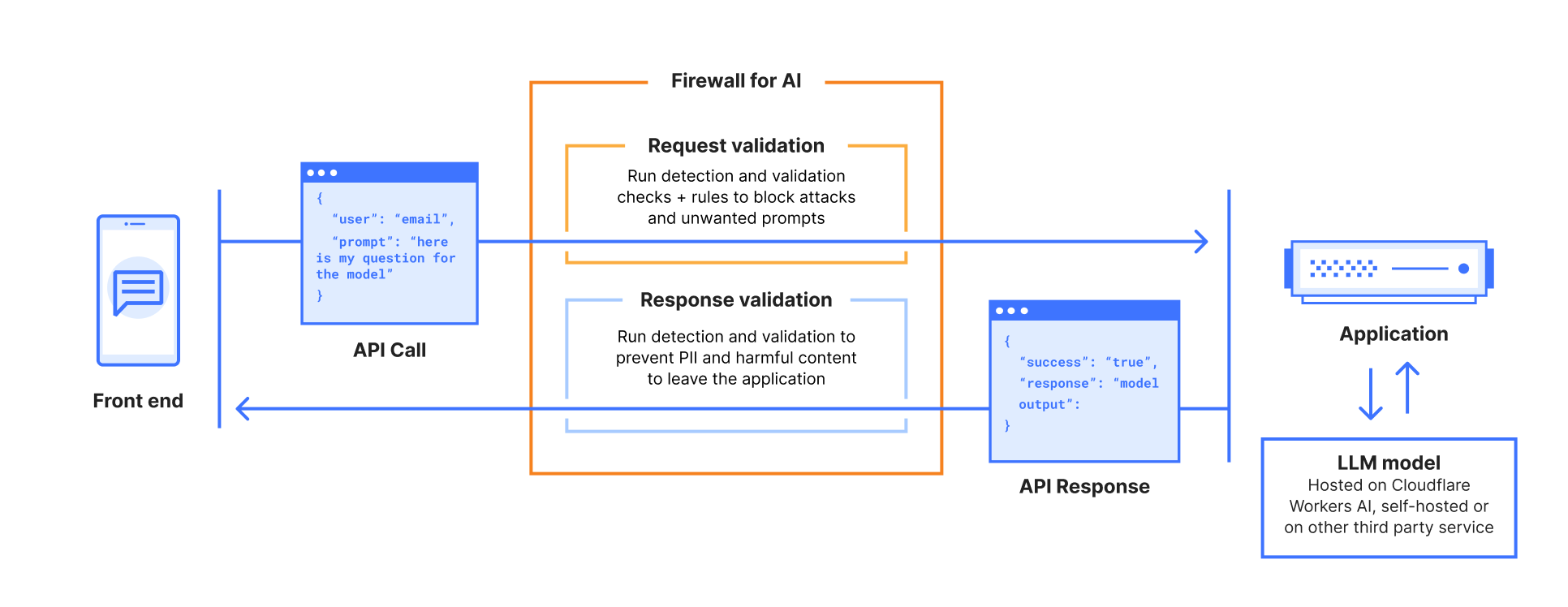

The suite consists of several services. Firewall for AI, for example, allows businesses to discover which AI applications employees actually use. The age-old problem of shadow IT persists: as employees clamor to use these new AI tools, security teams often have no insight into what those employees are doing. Now, security teams will get reports on which tools are in use, along with the ability to block them if necessary.

Similarly, the Cloudflare AI Gateway, which the company launched back in 2023, provides insights into the models being used inside a company and the kinds of prompts submitted to them. That, in turn, lets the firewall block prompts that may expose personally identifiable information or other sensitive data before they reach the model.
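To make the idea of prompt screening concrete, here is a minimal sketch of how a gateway could check a prompt for personally identifiable information before forwarding it to a model. It is not Cloudflare's implementation: the patterns, the `find_pii` and `screen_prompt` helpers, and the result format are all assumptions made for illustration, and real PII detection covers far more cases than two regular expressions.

```python
import re

# Hypothetical patterns for illustration only; production PII detection
# is much broader and does not rely on a pair of regular expressions.
PII_PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def find_pii(prompt: str) -> list[str]:
    """Return the names of the PII patterns that match the prompt."""
    return [name for name, pattern in PII_PATTERNS.items() if pattern.search(prompt)]


def screen_prompt(prompt: str) -> dict:
    """Decide whether a prompt may be forwarded to the model.

    A prompt is allowed only when no PII pattern matches it.
    """
    findings = find_pii(prompt)
    return {"allowed": not findings, "findings": findings}


if __name__ == "__main__":
    for text in (
        "Summarize this article about content delivery networks.",
        "Email jane.doe@example.com about the overdue invoice.",
    ):
        print(screen_prompt(text))
```

In this sketch, the screening step sits in front of the model call, so a flagged prompt can be blocked or logged without the model ever seeing it.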

Few tools on the market today focus on stopping toxic prompts. To detect these, Cloudflare is using Llama Guard, Meta’s tool for safeguarding LLM conversations. This, Cloudflare says, will help keep models in line with their intended usage.

Cloudflare is not alone in seeing a market for these tools, of course. There are API-centric offerings from Kong and IBM, for example, as well as solutions from F5 and Databricks.

The post Cloudflare for AI Helps Businesses Safely Use AI appeared first on The New Stack.