Among the groups recently harmed by illicit AI generation are YouTubers, the video creators who host their own shows on YouTube. There are numerous cases of YouTube videos, particularly informative or news-based ones, being ripped off by other parties. One way to do this is to copy a target video's captions: the AI-generated versions may look different, but they are based on the same content. The copies are made as quickly and automatically as possible, then published without crediting the real authors. Sometimes they are labelled as “summaries” of the original, which remains a grey area. This ecosystem diverts clicks and distorts discovery algorithms for genuine creators, leaving them poorer.

An interesting countermeasure is to “poison” those subtitles by adding nonsense text to the captions. It turns out that some subtitle formats allow the editor to place captions outside the visible screen area. No viewer of the video will see them, but caption copiers will ingest them. By converting between a few different closed caption formats, a YouTuber can edit their own subtitles to add these poison captions. The copies can then be made to say ridiculous things, usually in the middle of the video to escape immediate detection.
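The mechanism is easy to model. What follows is a minimal sketch, not the tooling any YouTuber actually uses. It assumes captions are cues with an anchor position on a 1920x1080 canvas (as in the Advanced SubStation Alpha format, whose `\pos(x,y)` override tag sets a line's position), attaches decoy cues placed far outside that canvas, and contrasts a renderer that only draws on-canvas cues with a scraper that reads every cue's text. The names `Cue`, `poison`, `viewer_text` and `scraper_text` are hypothetical. Whether a given platform preserves this positioning after upload and conversion is an assumption the code does not check.

```python
from dataclasses import dataclass

# Hypothetical canvas size, matching a typical PlayResX/PlayResY in an ASS file.
PLAY_W, PLAY_H = 1920, 1080


@dataclass
class Cue:
    start: float          # seconds
    end: float            # seconds
    text: str
    x: int = PLAY_W // 2  # anchor position in canvas pixels
    y: int = PLAY_H - 60


def is_visible(cue: Cue) -> bool:
    """Model of a renderer: only cues anchored inside the canvas are drawn."""
    return 0 <= cue.x < PLAY_W and 0 <= cue.y < PLAY_H


def poison(cues: list[Cue], decoys: list[str]) -> list[Cue]:
    """Attach off-screen decoys to real cues, starting at the middle cue,
    reusing each real cue's timing so nothing looks out of place."""
    out = list(cues)
    middle = len(cues) // 2
    for i, text in enumerate(decoys):
        target = cues[(middle + i) % len(cues)]
        out.append(Cue(target.start, target.end, text, x=-10_000, y=-10_000))
    # Stable sort: a decoy follows the real cue that shares its start time.
    return sorted(out, key=lambda c: c.start)


def viewer_text(cues: list[Cue]) -> list[str]:
    return [c.text for c in cues if is_visible(c)]


def scraper_text(cues: list[Cue]) -> list[str]:
    # A naive copier ignores positioning and keeps every line of text.
    return [c.text for c in cues]


def ass_time(seconds: float) -> str:
    """Format seconds as an ASS timestamp, H:MM:SS.cc (centiseconds)."""
    cs = round(seconds * 100)
    h, cs = divmod(cs, 360_000)
    m, cs = divmod(cs, 6_000)
    s, cs = divmod(cs, 100)
    return f"{h}:{m:02d}:{s:02d}.{cs:02d}"


def to_ass_events(cues: list[Cue]) -> str:
    """Emit only the [Events] section; a full file also needs
    [Script Info] and [V4+ Styles] sections defining the Default style."""
    lines = [
        "[Events]",
        "Format: Layer, Start, End, Style, Name, MarginL, MarginR, MarginV, Effect, Text",
    ]
    for c in cues:
        lines.append(
            f"Dialogue: 0,{ass_time(c.start)},{ass_time(c.end)},Default,,0,0,0,,"
            f"{{\\pos({c.x},{c.y})}}{c.text}"
        )
    return "\n".join(lines)
```

For example, poisoning three cues with one decoy leaves `viewer_text` identical to the original captions, while `scraper_text` picks up the extra line alongside the second cue. Placing decoys mid-video mirrors the point above about escaping immediate detection.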

A YouTuber producing poisoned videos in this way is much less likely to be ripped off, because the extra work needed to clean up the captions would make the copiers' whole workflow inefficient. This is not so different from locking your bike next to a more expensive one, or one with a weaker lock: it exploits the general laziness of attackers. For this reason, the concept of poisoning the well has caught on across the creative sector among everyone trying to defend themselves from AI predation.

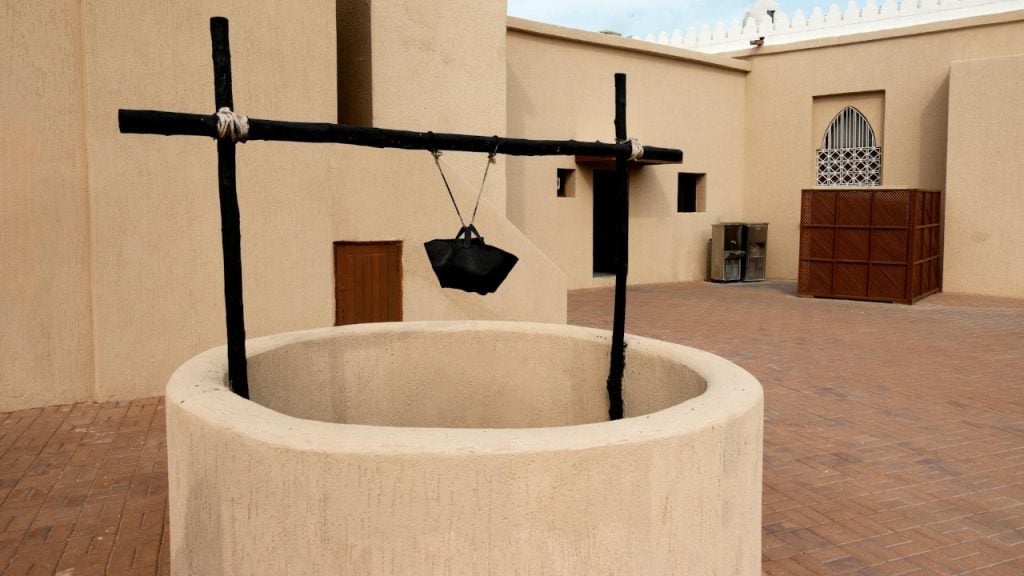

But it is the owner of the well that concerns this post. Think of the well as all of your platform’s public data: anything you or your organisation share with the public. Words, documents, conversations, APIs, visuals, everything. Keeping the water as clear as possible represents public trust in your organisation. Hoping your bike is less attractive than your neighbours’ is not a long-term defensive solution, but poisoning is now a serious problem for all public platforms, and there is a major lesson here.

The abuse of public data, and thus public identity, can quickly compromise the trustworthiness and functionality of a platform as well as its owners. Copying and summarising aren’t new: I can point to copies or summaries of my own posts from this publication appearing in other nebulous online publications or platforms, probably without permission. What is new is the rise of AI-powered pipelines that threaten more than one piece of creative output from one creator at a time. They can quickly subsume both content and identity.

Nothing Real To Fall Back On

Digital identity can be so fragile because there is nothing physical to fall back on. Companies that make real objects can at least let their products carry much of the burden of their identity.

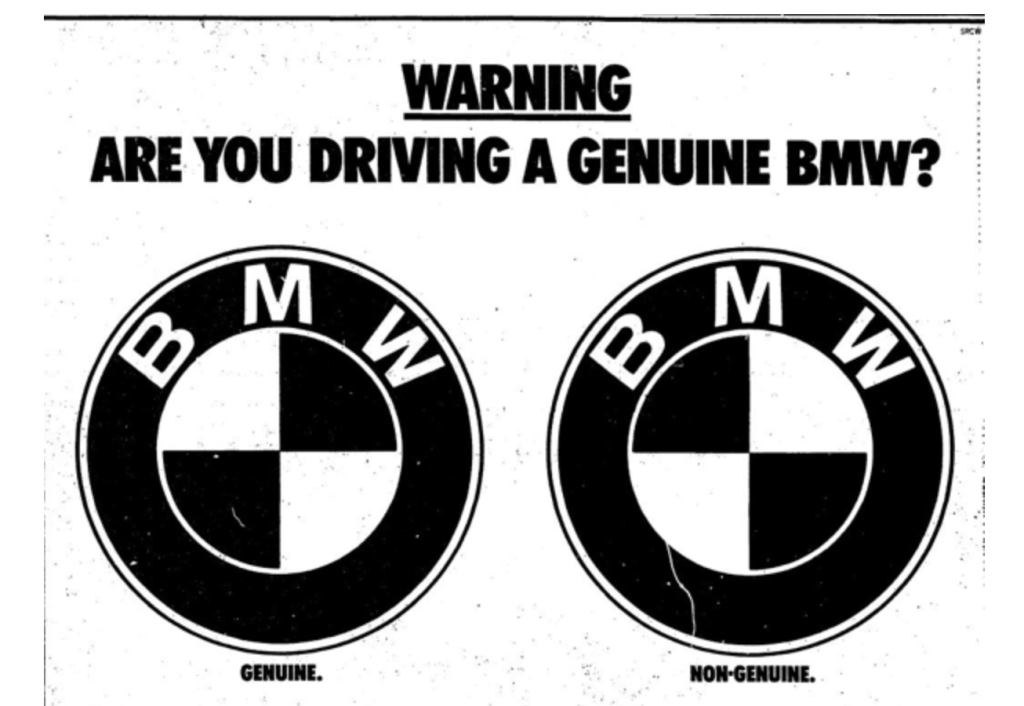

On April Fool’s Day 1987, the car company BMW cemented its identity while making fun of the fact that people don’t really notice details. Because its product was literally on the road in front of people, the identity confusion the joke deliberately induced was not dangerous:

Many people were fooled by this, and rang BMW to report that their genuine cars were fake.

Today, copies of company logos are often used in phishing attacks. Many startups don’t have any strong visual branding, which makes them even easier to impersonate. Or they don’t have memorable domain names, making it harder for users to spot an impostor. Distinct identities are always safer.

If you have no physical presence in the world, the last thing you want to do is dilute your human presence. Humans may be the only non-digital thing your platform has. And yet some small companies use chatbots. This is foolish, as it means a large share of their public interactions (and yes, that is public data) are not even produced by them.

Everything Is Made of Trust

Part of the solution is to understand how your platform’s public data and identity are intertwined. More or less everything a platform exposes is part of a trust chain. Every time you outsource something, especially to AI systems trained by others, you could weaken these links. That is why startups should keep tight control of all their data. It doesn’t matter much to YouTube if a few hundred of its 15 billion videos are nonsense, but a small platform cannot afford such an affront to trust.

We might laugh at company mission statements, but completely bland statements that we all know were AI-generated (or perhaps are the outcome of a brief Friday afternoon meeting) weaken the sense of a solid foundation. Identity, even a mission statement, is the root of trust for any platform. When producing documents, avoid releasing bloated statements that appear to come from nowhere. Small interlinked documents are easier to control and make provenance easier to ascertain. Think of all your communication as leaves and branches on a tree: part of something more substantial.
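One way to make “small interlinked documents” concrete is a hash chain: each published document records the SHA-256 digest of the document it builds on, so a reader can check whether a copy has been altered or taken out of sequence. This is a minimal sketch of that general idea, not a method from the post; the `Doc` schema and the `publish` and `verify` helpers are hypothetical.

```python
import hashlib
import json
from dataclasses import dataclass
from typing import Optional


@dataclass
class Doc:
    """A published document that names the digest of its parent (hypothetical schema)."""
    title: str
    body: str
    parent: Optional[str] = None  # SHA-256 hex digest of the previous document

    def digest(self) -> str:
        # Canonical JSON (sorted keys) so identical content always hashes identically.
        payload = json.dumps(
            {"title": self.title, "body": self.body, "parent": self.parent},
            sort_keys=True,
            ensure_ascii=False,
        )
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def publish(chain: list[Doc], title: str, body: str) -> Doc:
    """Append a new document linked to the latest one in the chain."""
    parent = chain[-1].digest() if chain else None
    doc = Doc(title, body, parent)
    chain.append(doc)
    return doc


def verify(chain: list[Doc]) -> bool:
    """True if the first document has no parent and every later document's
    parent digest matches the document immediately before it."""
    if chain and chain[0].parent is not None:
        return False
    return all(cur.parent == prev.digest() for prev, cur in zip(chain, chain[1:]))
```

Editing any earlier document breaks the link held by its successor. The newest document has no successor, so its digest would need to be published somewhere you control for it to be checkable as well.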

We are used to the erratic behaviour of some famous CEOs, but they have much larger wells to absorb their pollution.

Disease Carriers

Large social media sites are increasingly attracting online safety concerns. Sites like TikTok have recently been connected to teen suicides. These platforms carry user-generated data for which they try to take no responsibility. On closer examination, a system may have taken root within the platform that its owners can’t truly control. On a less dramatic scale, this kind of disease carrying is also dangerous to small platforms that run forums.

Many companies have had to take down or suspend forums because angry users (organised or not) can be reputationally ruinous. Poorly managed forums can make fairly harmless issues seem worse through inattention. Successful forums (usually on Slack or Discord) are staffed by nearly all of the developers, who respond as quickly as they reasonably can.

Our initial example of poisoned YouTube captions can also be seen as a form of disease carrying, since one of the caption formats allows what is effectively hidden text. This might seem like flexibility, but the example shows why it is potentially dangerous to the platform. I saw a similar case when an app that helps stores discount surplus food listed a business that wasn’t a shop. It was a suburban home on a quiet street, well away from the high road, suggesting late-evening pickup times: clearly risky. When the company behind the app was contacted, it had no process to deal with this situation. However well designed a platform is, apparently healthy parts can become infected.

Restricting free expression can reduce risky user-generated data. Most live gaming sites with chat channels carefully remove curse words, but some don’t support any verbal communication between users at all, or heavily anonymise it. Applying an editorial layer before anything is published in forums is one way to regain control.
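An editorial layer can be as simple as a queue: nothing reaches the public forum until a person approves it, and an automatic check flags obvious problems first. This is a minimal sketch under that assumption; `EditorialQueue`, `Post`, `auto_flags` and the `BLOCKED` word list are hypothetical placeholders, and whole-word matching against a short list is far cruder than any real moderation filter.

```python
import re
from dataclasses import dataclass
from enum import Enum


class Status(Enum):
    PENDING = "pending"
    PUBLISHED = "published"
    REJECTED = "rejected"


@dataclass(eq=False)  # compare posts by identity, not by content
class Post:
    author: str
    text: str
    status: Status = Status.PENDING
    flags: tuple[str, ...] = ()


# Hypothetical blocklist, matched as whole words, case-insensitively.
BLOCKED = ("scam", "crypto giveaway")


def auto_flags(text: str, blocked: tuple[str, ...] = BLOCKED) -> tuple[str, ...]:
    """Return the blocked phrases found in the text, to guide the human editor."""
    return tuple(
        w for w in blocked if re.search(rf"\b{re.escape(w)}\b", text, re.IGNORECASE)
    )


class EditorialQueue:
    def __init__(self) -> None:
        self.pending: list[Post] = []
        self.public: list[Post] = []  # only approved posts ever land here

    def submit(self, author: str, text: str) -> Post:
        post = Post(author, text, flags=auto_flags(text))
        self.pending.append(post)
        return post

    def review(self, post: Post, approve: bool) -> None:
        """A human editor makes the final call; flags are advisory only."""
        self.pending.remove(post)
        post.status = Status.PUBLISHED if approve else Status.REJECTED
        if approve:
            self.public.append(post)
```

Keeping the automatic check advisory means a flagged post can still be approved deliberately, and an unflagged one can still be rejected. The control stays with the editor rather than with the filter.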

Be a Careful Well Manager

Nefarious parties literally putting poison into store food products, or adulterating them in other ways, have a history of their own. Food producers have countered these attacks with safety features on containers that help shoppers spot whether they have already been opened. But the best defence is making it clear that only a small set of carefully sourced ingredients is used in the products, and that all packing plants are controlled directly by the company. Similarly, software platforms should keep careful control of their public data, and avoid third parties or AI generation until they are more established.

One of the problems with AI generation is that the field is growing and no one can predict what alarming capabilities will arrive next. Instead of waiting for problems to strike, keep careful control of all your public data and processes. Keep them as original as possible. Watch where your data goes, and how it could be changed.
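Watching where your data goes can start with something crude: comparing your published text against a suspected copy you have already found. This is a minimal sketch assuming you have both texts in hand. It uses word 5-gram shingles and Jaccard similarity, a common near-duplicate heuristic, and the 0.5 threshold is an arbitrary placeholder rather than a tested value. A reworded AI “summary” will usually score much lower than a straight copy.

```python
import re


def shingles(text: str, k: int = 5) -> set[tuple[str, ...]]:
    """Overlapping k-word windows over the lower-cased words of the text."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    if len(words) < k:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a: set, b: set) -> float:
    """Size of the intersection divided by size of the union (0.0 for two empty sets)."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def looks_copied(original: str, candidate: str, k: int = 5, threshold: float = 0.5) -> bool:
    """Flag the candidate if it shares enough k-word runs with the original."""
    return jaccard(shingles(original, k), shingles(candidate, k)) >= threshold
```

Appending a short phrase to a 17-word sentence, for instance, keeps 13 of its 16 distinct shingles shared (a score of about 0.81), while an unrelated sentence scores 0.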

The post Poisoning the Well and Other Generative AI Risks appeared first on The New Stack.