As cloud-native applications gain momentum, efficient, scalable, and automated container management becomes increasingly important. Kubernetes has emerged as the industry-standard platform for automating the deployment, scaling, and management of containerized applications across cloud environments. This article examines how Kubernetes changes the way organizations manage the application lifecycle in the cloud, through features such as autoscaling, self-healing, and high availability.

What is Kubernetes?

Kubernetes, often abbreviated as K8s, is an open-source container orchestration platform designed to automate the deployment, scaling, and management of containerized applications. Originally developed by Google and now maintained by the Cloud Native Computing Foundation (CNCF), Kubernetes has become the standard choice for organizations looking to streamline how they run applications in the cloud. By abstracting away much of the complexity of the underlying infrastructure, Kubernetes lets developers focus on application code while the platform handles many operational concerns.

Kubernetes offers the same container management model across on-premises data centers, public clouds, and hybrid environments. Because applications are described against the Kubernetes API rather than against specific machines, they can run in a largely consistent manner regardless of the underlying hardware or cloud provider, although provider-specific integrations such as storage and load balancers still differ.

Automating Application Deployment with Kubernetes

One of the most powerful features of Kubernetes is its ability to automate the deployment of applications. Traditionally, deploying an application to a cloud environment involves manually provisioning servers, configuring networking, and installing dependencies. Kubernetes eliminates much of this manual effort by using containers as the foundation for deploying applications. Containers encapsulate an application and its dependencies, ensuring that the application runs consistently across various environments, from local development to production in the cloud.

In Kubernetes, deployments are defined using declarative configuration files (manifests), typically written in YAML or JSON. A manifest describes the desired state of an object: a Deployment, for example, specifies the container image, the number of replicas, and the resources each pod requests, while storage and network rules are declared in their own objects, such as PersistentVolumeClaims and NetworkPolicies. Kubernetes controllers then work continuously to make the cluster match that specification, scheduling pods onto nodes and replacing them when needed. To make the application reachable, you typically also define a Service, and for traffic from outside the cluster, an Ingress or a Service of type LoadBalancer.
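
As a minimal sketch, the Python snippet below builds a Deployment manifest as a dictionary and prints it as JSON, which `kubectl apply -f` accepts just as it accepts YAML. The name `web`, the image `registry.example.com/web:1.0`, the port, and the resource requests are hypothetical placeholders.

```python
import json

def make_deployment(name, image, replicas=3, port=8080):
    """Build a minimal apps/v1 Deployment manifest as a Python dict."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,                  # desired number of pods
            "selector": {"matchLabels": labels},   # which pods this Deployment owns
            "template": {                          # blueprint for every pod
                "metadata": {"labels": labels},    # must match the selector above
                "spec": {
                    "containers": [{
                        "name": name,
                        "image": image,
                        "ports": [{"containerPort": port}],
                        # Requests tell the scheduler how much room each pod needs.
                        "resources": {"requests": {"cpu": "250m", "memory": "256Mi"}},
                    }],
                },
            },
        },
    }

if __name__ == "__main__":
    # Hypothetical image name; kubectl accepts JSON as well as YAML manifests.
    print(json.dumps(make_deployment("web", "registry.example.com/web:1.0"), indent=2))
```

Saved as a script, its output could be redirected to `web.json` and applied with `kubectl apply -f web.json`. Generating manifests from code is only one option; most teams keep hand-written YAML files in version control.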

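The declarative nature of Kubernetes means that developers can keep their application’s infrastructure under version control. When the configuration changes and is applied to the cluster (for example with `kubectl apply`), Kubernetes adjusts the running system to match the new desired state, whether that means adding replicas or rolling out a new image. Rolling back is handled the same way: reapply the previous configuration, or use `kubectl rollout undo` to return a Deployment to its prior revision. The result is faster, more repeatable deployments.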
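
The idea of converging on a desired state can be illustrated with a toy reconcile step. This is a simplified model written for illustration, not Kubernetes’ actual controller code: the function `reconcile` and its pod-name-to-image input are hypothetical. A real Deployment replaces outdated pods gradually through ReplicaSets, governed by `maxSurge` and `maxUnavailable`, rather than deleting them all at once.

```python
def reconcile(desired_replicas, desired_image, running):
    """Return the actions that move `running` toward the desired state.

    `running` maps pod name -> image. Toy model of a controller's reconcile
    step, not the real Deployment controller.
    """
    actions = []
    # Pods built from an outdated image do not count toward the desired state.
    current = sorted(pod for pod, image in running.items() if image == desired_image)
    outdated = sorted(pod for pod, image in running.items() if image != desired_image)
    actions += [("delete", pod) for pod in outdated]
    # Too many up-to-date pods: delete the surplus.
    actions += [("delete", pod) for pod in current[desired_replicas:]]
    # Too few: create the missing ones (range() of a negative number is empty).
    actions += [("create", desired_image) for _ in range(desired_replicas - len(current))]
    return actions
```

For example, with three replicas of `web:2` desired and pods `{"a": "web:2", "b": "web:1"}` running, the step deletes `b` and creates two new `web:2` pods. Running it again against the resulting state yields no actions, which is what "matching the desired state" means in practice.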

Scaling Applications Automatically with Kubernetes

Scaling is one of the key challenges in managing cloud applications, especially as the number of users fluctuates. Kubernetes supports horizontal scaling natively, and vertical scaling (adjusting the CPU and memory given to each pod) through the separately installed Vertical Pod Autoscaler add-on. Horizontal scaling increases or decreases the number of application instances (pods) to match demand, based on metrics such as CPU usage, memory usage, or custom metrics that you define.
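
Horizontal scaling is configured with a HorizontalPodAutoscaler object (API version `autoscaling/v2`). The sketch below builds one as a Python dict; the target Deployment `web`, the 2 to 10 replica range, and the 70% CPU target are hypothetical values. It assumes the Deployment sets CPU requests, because utilization is measured as a percentage of each pod’s request.

```python
import json

def make_hpa(deployment, min_replicas=2, max_replicas=10, cpu_target_percent=70):
    """Build an autoscaling/v2 HorizontalPodAutoscaler that targets a Deployment."""
    return {
        "apiVersion": "autoscaling/v2",
        "kind": "HorizontalPodAutoscaler",
        "metadata": {"name": deployment},
        "spec": {
            "scaleTargetRef": {"apiVersion": "apps/v1", "kind": "Deployment", "name": deployment},
            "minReplicas": min_replicas,
            "maxReplicas": max_replicas,
            "metrics": [{
                "type": "Resource",
                "resource": {
                    "name": "cpu",
                    # Average CPU use across pods, as a percentage of their CPU requests.
                    "target": {"type": "Utilization", "averageUtilization": cpu_target_percent},
                },
            }],
        },
    }

if __name__ == "__main__":
    print(json.dumps(make_hpa("web"), indent=2))
```

A memory target would be a second `Resource` entry with `"name": "memory"`; custom metrics use the `Pods`, `Object`, or `External` metric types instead.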

The Kubernetes Horizontal Pod Autoscaler (HPA) is a built-in controller that periodically adjusts the number of pods in a Deployment (or another scalable resource, such as a StatefulSet) based on observed metrics. For example, if average CPU utilization across the pods, measured as a percentage of their CPU requests, rises above the target, the HPA adds replicas to spread the load. Conversely, when demand falls, it removes replicas to free resources. Resource metrics such as CPU and memory require the metrics server (or another provider of the resource metrics API) to be installed in the cluster. This elasticity keeps resource use efficient while maintaining performance under varying traffic loads.
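
The Kubernetes documentation gives the HPA’s core scaling rule as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), skipping changes when the ratio is within a tolerance of 1.0 (0.1 by default). The function below is a minimal sketch of just that rule, clamped to the configured bounds; the real controller also applies a scale-down stabilization window and special handling for unready pods and missing metrics, which are omitted here.

```python
import math

def desired_replicas(current_replicas, current_value, target_value,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Core HPA rule: ceil(current_replicas * current_value / target_value)."""
    ratio = current_value / target_value
    # Leave the replica count alone when the metric is already close to the target.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Never go outside the configured minimum and maximum.
    return max(min_replicas, min(max_replicas, desired))
```

With 4 replicas averaging 90% CPU against a 60% target, the ratio is 1.5 and the rule asks for 6 replicas; at 20% CPU it asks for ceil(4 × 1/3) = 2.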

In addition to the HPA, Kubernetes clusters can use **cluster autoscaling**, typically through the Cluster Autoscaler, a separate component that is often provided or preconfigured by the cloud provider. It adds or removes nodes (virtual machines) in response to resource demand: it adds nodes when pods remain pending because no existing node has enough free resources to schedule them, and it removes underutilized nodes whose pods can be rescheduled elsewhere. This is particularly useful in cloud environments, where machines can be provisioned on demand within the minimum and maximum sizes configured for each node group.
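
To make the scale-up decision concrete, the sketch below estimates how many new nodes would be needed to fit a set of pending pods, using first-fit decreasing bin packing on CPU requests only. This is a deliberate simplification chosen for illustration: the real Cluster Autoscaler simulates the scheduler against node-group templates and considers memory, affinity, and other constraints. The function name and millicore inputs are hypothetical.

```python
def nodes_to_add(pending_cpu_m, node_capacity_m):
    """Estimate new nodes needed for pending pods' CPU requests (millicores).

    First-fit decreasing bin packing; ignores memory and existing nodes.
    """
    free = []  # remaining millicores on each hypothetical new node
    for request in sorted(pending_cpu_m, reverse=True):
        if request > node_capacity_m:
            # No node of this size could ever host the pod.
            raise ValueError(f"a {request}m pod cannot fit on a {node_capacity_m}m node")
        for i, room in enumerate(free):
            if room >= request:
                free[i] = room - request  # place the pod on the first node with room
                break
        else:
            free.append(node_capacity_m - request)  # no room anywhere: add a node
    return len(free)
```

For pending pods requesting 1500m, 1500m, 1000m, and 500m of CPU on 2000m nodes, this estimates three new nodes.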

Self-Healing and Fault Tolerance in Kubernetes

Cloud applications need to be highly available and resilient to failures. Kubernetes helps make applications self-healing and fault-tolerant by continuously comparing the actual state of the cluster with the desired state. When a container, pod, or node fails, Kubernetes automatically takes corrective action to restore the desired state.

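For example, if a container crashes, the kubelet on its node restarts it according to the pod’s restart policy; if a pod is lost entirely, for instance because its node fails, the controller that manages it (such as a Deployment’s ReplicaSet) creates a replacement pod. Detecting a container that is still running but no longer responding requires a liveness probe, described below. When other replicas are available to serve traffic, users typically experience little or no disruption. This is why Kubernetes workloads usually run multiple replicas of an application, ideally on different nodes, so that the application remains available even if one instance fails. The scheduler spreads replicas on a best-effort basis; topology spread constraints or pod anti-affinity rules can make the spread explicit.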
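
As a minimal sketch, the helper below adds a topology spread constraint to a pod template so that replicas carrying a given `app` label are spread across nodes (`kubernetes.io/hostname`). The helper name and the label value are hypothetical; `ScheduleAnyway` makes the spread a preference, while `DoNotSchedule` would make it a hard requirement.

```python
import copy

def spread_across_nodes(pod_template, app_label, max_skew=1):
    """Return a copy of a pod template whose replicas prefer distinct nodes."""
    template = copy.deepcopy(pod_template)  # leave the caller's dict untouched
    template["spec"]["topologySpreadConstraints"] = [{
        "maxSkew": max_skew,                      # allowed imbalance between nodes
        "topologyKey": "kubernetes.io/hostname",  # treat each node as its own domain
        "whenUnsatisfiable": "ScheduleAnyway",    # prefer spreading, but still schedule
        "labelSelector": {"matchLabels": {"app": app_label}},
    }]
    # Deployments only allow restartPolicy "Always": crashed containers restart in place.
    template["spec"]["restartPolicy"] = "Always"
    return template
```

The returned template would go under a Deployment’s `spec.template`.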

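Kubernetes can also run periodic health checks, configured per container as readiness and liveness probes. A readiness probe checks whether a container is ready to accept traffic; while it fails, the pod is removed from the endpoints of any Service that selects it, so it receives no new requests. A liveness probe checks whether the container is still healthy; if it fails repeatedly (as set by its `failureThreshold`), the kubelet restarts the container. Together, the two probes keep traffic away from unhealthy instances and recover containers that have hung.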
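
The sketch below adds HTTP liveness and readiness probes to a container spec. The endpoints `/healthz` and `/ready`, the port, and the timing values are hypothetical; the application would have to serve those paths. With these settings, a container that stops answering its liveness endpoint is restarted after three consecutive failed checks, roughly 30 seconds apart in total.

```python
import copy

def with_probes(container, port=8080, liveness_path="/healthz", readiness_path="/ready"):
    """Return a copy of a container spec with HTTP liveness and readiness probes."""
    c = copy.deepcopy(container)
    # Liveness: after 3 consecutive failures the kubelet restarts the container.
    c["livenessProbe"] = {
        "httpGet": {"path": liveness_path, "port": port},
        "initialDelaySeconds": 10,  # give the application time to start
        "periodSeconds": 10,
        "failureThreshold": 3,
    }
    # Readiness: while failing, the pod is removed from its Services' endpoints.
    c["readinessProbe"] = {
        "httpGet": {"path": readiness_path, "port": port},
        "periodSeconds": 5,
        "failureThreshold": 1,
    }
    return c
```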

Service Discovery and Load Balancing in Kubernetes

In modern cloud applications, services often need to communicate with one another. Kubernetes simplifies this with DNS-based service discovery inside the cluster. Every Service is automatically assigned a DNS name of the form `<service>.<namespace>.svc.cluster.local` (where `cluster.local` is the default cluster domain), and pods in the same namespace can use the short service name alone. Other workloads therefore connect by name rather than by hardcoded IP address, which makes it easy to update or replace the pods behind a Service without disrupting the rest of the application.
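
A minimal sketch, assuming pods labeled `app: web` that listen on port 8080 (hypothetical values): a ClusterIP Service that selects those pods, plus a helper that builds the fully qualified DNS name under which other pods can reach it. Clusters configured with a domain other than `cluster.local` would pass that domain instead.

```python
def make_service(name, port=80, target_port=8080):
    """Build a ClusterIP Service that routes to pods labeled app=<name>."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "selector": {"app": name},  # pods carrying this label become endpoints
            "ports": [{"port": port, "targetPort": target_port, "protocol": "TCP"}],
        },
    }

def service_fqdn(service, namespace="default", cluster_domain="cluster.local"):
    """Fully qualified DNS name of a Service inside the cluster."""
    return f"{service}.{namespace}.svc.{cluster_domain}"
```

A client in another namespace could then reach the Service at `http://web.default.svc.cluster.local:80`.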

Kubernetes Services also provide built-in load balancing. Traffic sent to a Service is distributed, by kube-proxy or an equivalent network plugin, across the Service’s ready pods, so requests are spread over all healthy instances. The distribution does not take into account how busy each pod is, so it reduces the risk of overloading a single instance rather than strictly preventing it. Traffic from outside the cluster typically enters through a Service of type LoadBalancer or an Ingress. Together, these mechanisms support high availability and efficient resource use, even under heavy traffic.
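
The toy balancer below only illustrates the principle of sending each new connection to one of the ready endpoints, using round robin. It is not how kube-proxy is implemented: in iptables mode kube-proxy picks a backend at random, while IPVS mode defaults to round robin. The class name and pod IPs are hypothetical.

```python
import itertools

class RoundRobinBalancer:
    """Toy model: cycle through the endpoints whose readiness probe passes."""

    def __init__(self, endpoints):
        # endpoints maps a pod IP to whether that pod is currently ready
        ready = [ip for ip, is_ready in endpoints.items() if is_ready]
        if not ready:
            raise RuntimeError("no ready endpoints behind this Service")
        self._next = itertools.cycle(ready)

    def pick(self):
        """Return the pod IP that should receive the next connection."""
        return next(self._next)
```

With endpoints `{"10.0.0.1": True, "10.0.0.2": False, "10.0.0.3": True}`, successive picks alternate between `10.0.0.1` and `10.0.0.3`; the unready pod never receives traffic.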

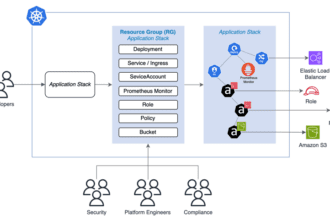

Configuring and Managing Cloud Environments with Kubernetes

Managing different environments in the cloud can be a complex task, especially as the number of services and applications grows. Kubernetes simplifies this with namespaces, which divide a cluster’s resources into separately named groups. This makes it easier to manage multiple environments, such as development, staging, and production, within a single cluster. Each namespace can have its own resources, configurations, access controls (through RBAC), and resource quotas, while still sharing the underlying nodes. Namespaces are a logical boundary rather than a complete isolation mechanism; restricting network traffic between them, for example, requires NetworkPolicies.
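
As a sketch, the snippet below creates one namespace per environment, each with a ResourceQuota that caps the total CPU, memory, and pod count its workloads may request. The quota values are hypothetical. Once such a quota exists, pods in that namespace must declare CPU and memory requests (or receive defaults from a LimitRange), otherwise they are rejected.

```python
import json

def make_environment(name, cpu="4", memory="8Gi", max_pods=20):
    """Namespace plus a ResourceQuota capping what that environment may request."""
    namespace = {"apiVersion": "v1", "kind": "Namespace", "metadata": {"name": name}}
    quota = {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        "metadata": {"name": f"{name}-quota", "namespace": name},
        "spec": {"hard": {
            "requests.cpu": cpu,        # total CPU all pods in the namespace may request
            "requests.memory": memory,  # total memory requests
            "pods": str(max_pods),      # maximum number of pods
        }},
    }
    return [namespace, quota]

if __name__ == "__main__":
    items = [obj for env in ("development", "staging", "production")
             for obj in make_environment(env)]
    # A v1 List lets kubectl apply several objects from one file.
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": items}, indent=2))
```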

Additionally, Kubernetes manages configuration data and sensitive information through ConfigMaps and Secrets. ConfigMaps store non-sensitive configuration data, such as environment variables or configuration files, while Secrets store sensitive data, such as passwords or API keys. Secret values are only base64-encoded, and by default they are stored unencrypted in etcd unless encryption at rest is enabled, so access to them should be restricted with RBAC. Both can be supplied to containers as environment variables or mounted files, making it easier to manage environment-specific configuration without modifying application code.
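
The sketch below builds a ConfigMap, a Secret, and the `envFrom` entries that would load both into a container as environment variables. The application name, keys, and values are hypothetical. It uses the Secret’s `data` field, which requires base64-encoded values; Kubernetes also accepts plain text in the `stringData` field.

```python
import base64

def make_config(app, settings, secrets):
    """Build a ConfigMap, a Secret, and the envFrom entries that load both."""
    config_map = {
        "apiVersion": "v1",
        "kind": "ConfigMap",
        "metadata": {"name": f"{app}-config"},
        "data": dict(settings),  # plain-text, non-sensitive values
    }
    secret = {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": f"{app}-secret"},
        "type": "Opaque",
        # Values under "data" must be base64-encoded: an encoding, not encryption.
        "data": {k: base64.b64encode(v.encode()).decode() for k, v in secrets.items()},
    }
    # Placed in a container spec, envFrom exposes every key as an environment variable.
    env_from = [
        {"configMapRef": {"name": config_map["metadata"]["name"]}},
        {"secretRef": {"name": secret["metadata"]["name"]}},
    ]
    return config_map, secret, env_from
```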

Integrating Kubernetes with CI/CD Pipelines

Continuous integration and continuous deployment (CI/CD) practices are critical for modern software development. Because every Kubernetes operation goes through its API, CI/CD pipelines can automate the deployment of containerized applications whenever new code is pushed to a version control system.

By connecting CI/CD tools such as Jenkins, GitLab CI, or CircleCI to a Kubernetes cluster, teams can create automated workflows that build and test a new container image for each change, push it to a registry, and update the cluster to run it. This enables faster feedback loops and more frequent releases. The CI system is responsible for building and testing images; Kubernetes then rolls them out consistently, for example through a Deployment’s rolling update, which replaces pods gradually so the application stays available during the release.
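
A pipeline’s final deploy step can be as small as two `kubectl` commands: point the Deployment at the new image, then wait for the rollout to finish. The sketch below builds those commands and, unless `dry_run` is set, runs them. The registry `registry.example.com`, the names, and the convention of tagging images with the commit SHA are hypothetical, and the earlier build, test, and push stages are not shown. Applying updated manifests with `kubectl apply` is a common alternative.

```python
import subprocess

def deploy_commands(deployment, container, registry, commit_sha, namespace="default"):
    """kubectl commands a pipeline step could run after pushing a new image."""
    # Tag images with the commit SHA so every deployed version is traceable.
    image = f"{registry}/{deployment}:{commit_sha[:12]}"
    target = f"deployment/{deployment}"
    return [
        # Point the Deployment at the new image; this triggers a rolling update.
        ["kubectl", "-n", namespace, "set", "image", target, f"{container}={image}"],
        # Wait for the rollout to finish; a non-zero exit fails the pipeline.
        ["kubectl", "-n", namespace, "rollout", "status", target, "--timeout=120s"],
    ]

def deploy(*args, dry_run=True, **kwargs):
    """Print the commands (dry run) or execute them in order."""
    for cmd in deploy_commands(*args, **kwargs):
        if dry_run:
            print(" ".join(cmd))
        else:
            subprocess.run(cmd, check=True)  # raises CalledProcessError on failure
```

If the rollout does not complete in time, a follow-up pipeline step could run `kubectl rollout undo deployment/web` to return to the previous revision.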

Conclusion

Kubernetes is a powerful tool for automating the deployment, scaling, and management of containerized applications in the cloud. By leveraging its robust features, such as self-healing, auto-scaling, service discovery, and CI/CD integration, organizations can achieve greater efficiency, reliability, and flexibility in their cloud environments. Kubernetes empowers developers to focus on building applications while leaving the complexities of infrastructure management to the platform.

Whether you’re deploying simple microservices or large-scale cloud applications, Kubernetes helps keep your applications available, scalable, and resilient, providing a smooth and reliable experience for developers and users alike.