With Windows 11 version 24H2, Microsoft has enabled access to the Neural Processing Unit (NPU) on Copilot+ PCs. Although Copilot+ PCs also support Intel Core Ultra 200V and AMD Ryzen AI 300 series processors, the first Copilot+ PC models shipped with Qualcomm Snapdragon X Elite and Snapdragon X Plus processors. These ARM-based processors feature NPUs capable of more than 40 trillion operations per second (TOPS).

Recently, Microsoft made it possible for developers to download foundation models optimized for Copilot+ PCs and run them locally. Among these are the distilled DeepSeek R1 models, including the 7B and 14B versions, which are tuned to run on devices equipped with the latest NPUs.

Running DeepSeek models on Copilot+ PCs represents a significant advancement in local AI processing capabilities.

DeepSeek Models on Copilot+ PCs

Model Availability: The DeepSeek R1 models, starting with the initial 1.5B version, are available through Azure AI Foundry. The larger 7B and 14B models are designed to run efficiently on Copilot+ PCs powered by Qualcomm Snapdragon X processors, with support for Intel Core Ultra 200V and AMD Ryzen processors expected soon.

Efficiency and Performance: These distilled models are quantized to low-bit precision, which lets them run effectively on consumer-grade hardware without relying heavily on cloud resources. The result is faster execution for tasks such as natural language processing, enabling real-time responses and a better user experience.
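To see why low-bit precision matters on consumer hardware, here is a back-of-the-envelope estimate of how much memory the model weights alone occupy at different bit widths. It is a sketch under stated assumptions: the 16-bit and 4-bit widths are illustrative choices, not a description of how these particular models were quantized, and the estimate ignores activations, the KV cache, and quantization metadata such as scales.

```python
# Rough, weight-only memory estimate for a model at different bit widths.
# Assumption: illustrative bit widths; ignores activations, KV cache,
# and quantization metadata (scales, zero points).


def weight_memory_gb(num_params: float, bits_per_weight: int) -> float:
    """Return the approximate size of the weights in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


for name, params in [("1.5B", 1.5e9), ("7B", 7e9), ("14B", 14e9)]:
    fp16 = weight_memory_gb(params, 16)
    int4 = weight_memory_gb(params, 4)
    print(f"{name}: {fp16:.2f} GB at 16-bit, {int4:.2f} GB at 4-bit")
```

Under these assumptions, the 14B model's weights shrink from roughly 28 GB at 16-bit to roughly 7 GB at 4-bit, a size that fits far more comfortably in a typical laptop's memory.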

NPU Optimization: The NPUs in Copilot+ PCs are engineered to handle AI workloads efficiently. They deliver sustained AI compute while minimizing the impact on battery life and thermals, freeing the CPU and GPU for other tasks.

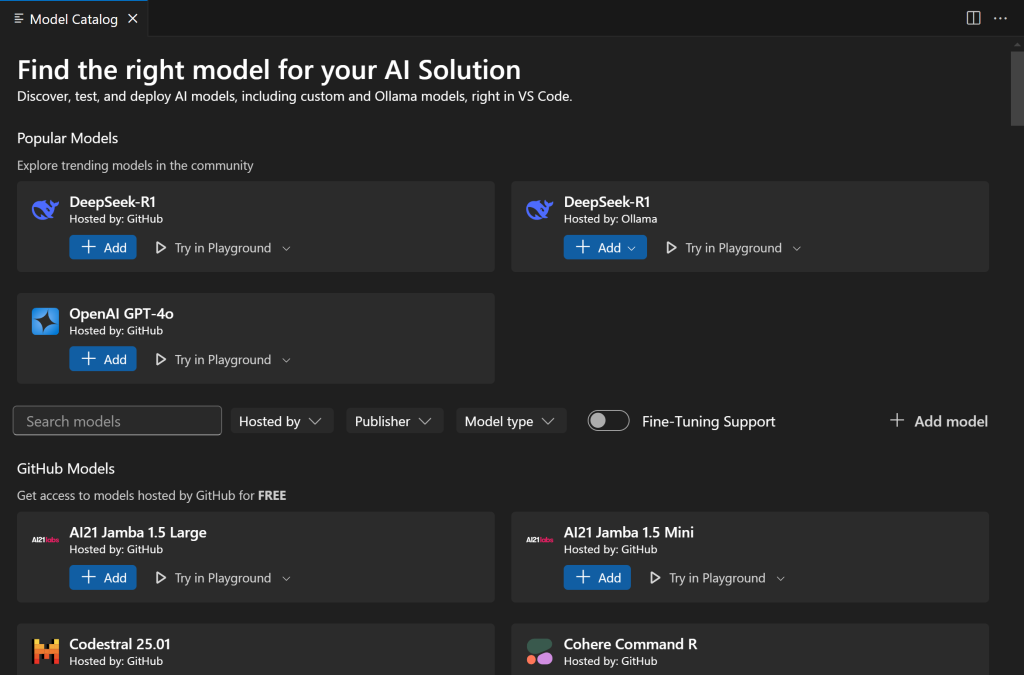

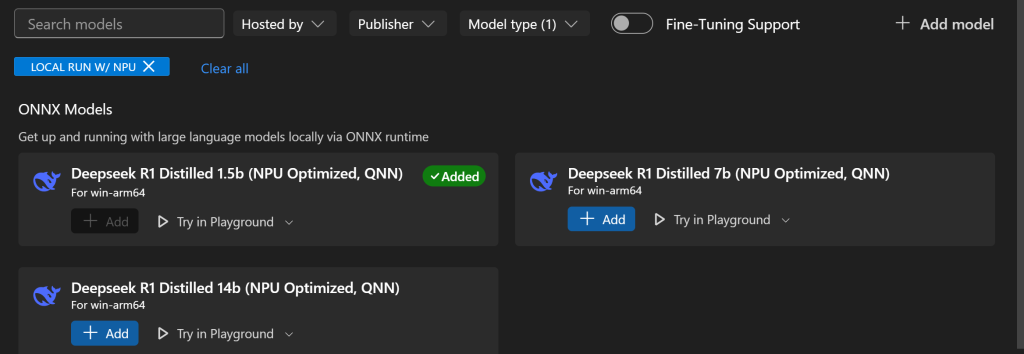

Developer Access: Developers can access these models by installing the AI Toolkit extension for VS Code. The models are distributed in the ONNX QDQ format, in which quantized tensors are represented with QuantizeLinear and DequantizeLinear operator pairs, a layout suited to deployment on NPUs. The toolkit lets developers experiment with AI features directly on their local machines, streamlining development workflows.
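In the ONNX QDQ format, quantized tensors are expressed with QuantizeLinear and DequantizeLinear operator pairs. The sketch below reproduces the arithmetic those operators perform, q = clamp(round(x / scale) + zero_point) and x ≈ (q - zero_point) * scale, in plain Python. The helper names, the int8 range, and the symmetric per-tensor scale are assumptions chosen for illustration; they are not ONNX Runtime APIs and not necessarily the scheme used for the DeepSeek models.

```python
# Sketch of the arithmetic behind an ONNX QuantizeLinear/DequantizeLinear (QDQ) pair.
# Hypothetical helpers: one per-tensor scale and zero point, int8 range assumed.


def quantize_linear(xs, scale, zero_point, qmin=-128, qmax=127):
    """Map floats to integer codes: clamp(round(x / scale) + zero_point)."""
    # Python's round() rounds half to even, as the ONNX spec requires.
    return [max(qmin, min(qmax, round(x / scale) + zero_point)) for x in xs]


def dequantize_linear(qs, scale, zero_point):
    """Map integer codes back to approximate floats: (q - zero_point) * scale."""
    return [(q - zero_point) * scale for q in qs]


def symmetric_scale(xs, qmax=127):
    """Choose a scale so the largest magnitude maps to qmax (zero point stays 0)."""
    peak = max(abs(x) for x in xs)
    return peak / qmax if peak > 0 else 1.0


weights = [0.42, -1.27, 0.003, 0.9]
scale = symmetric_scale(weights)
codes = quantize_linear(weights, scale, zero_point=0)
restored = dequantize_linear(codes, scale, zero_point=0)
print(codes)
print([round(r, 4) for r in restored])
```

For values inside the representable range, each restored value lands within half a scale step of the original; that small loss of precision is the trade for compact integer weights that NPUs process efficiently.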

Use Cases: Integrating DeepSeek models enables developers to build smarter applications across domains such as virtual assistants, speech recognition systems and automation tools. Because the models run locally, users can expect better performance in tasks like document summarization and email management.

Running DeepSeek Models on Copilot+ PCs

On a Copilot+ PC powered by Qualcomm Snapdragon processors, you can run DeepSeek through the AI Toolkit extension for VS Code. Once you install AI Toolkit, you can access the Azure model catalog, which shows all the models available for inference.

You can filter the catalog to show only models optimized for the NPU.

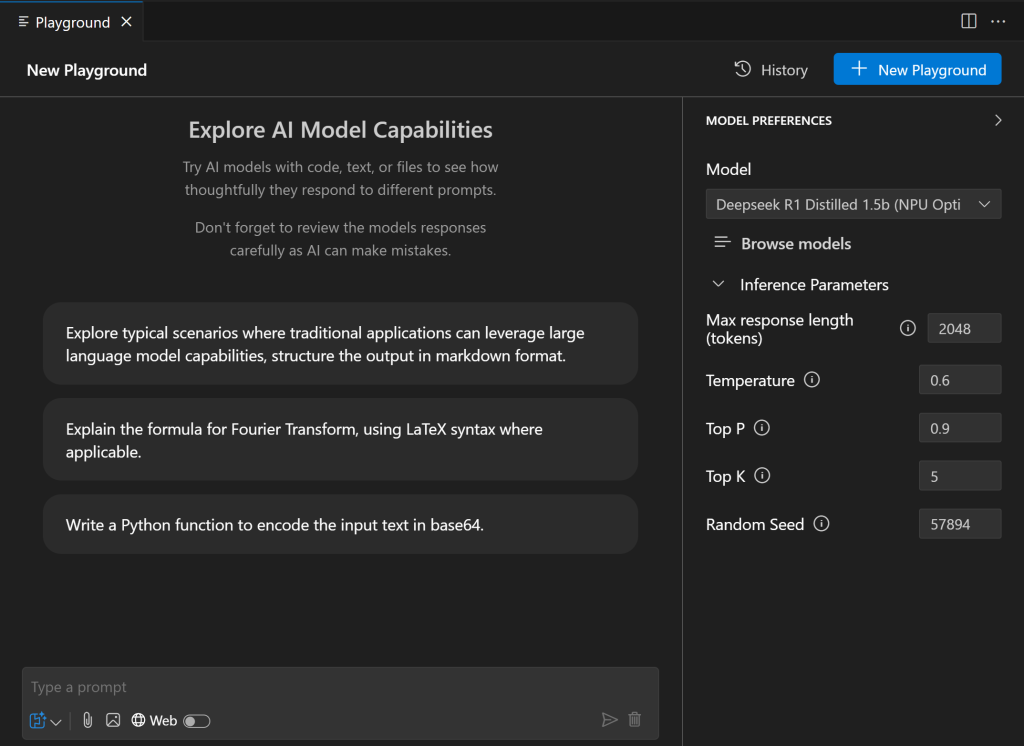

I downloaded the DeepSeek R1 Distilled Qwen 1.5B model to run locally. Once the download finishes, you can open the model in the playground.

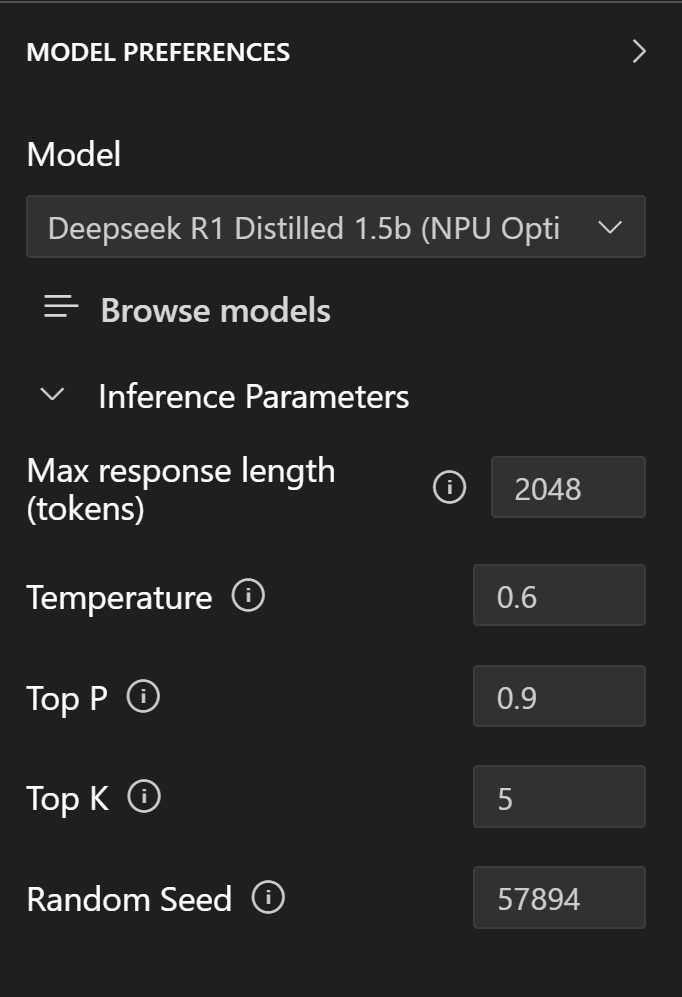

You can now change the parameters and generate responses from the model.

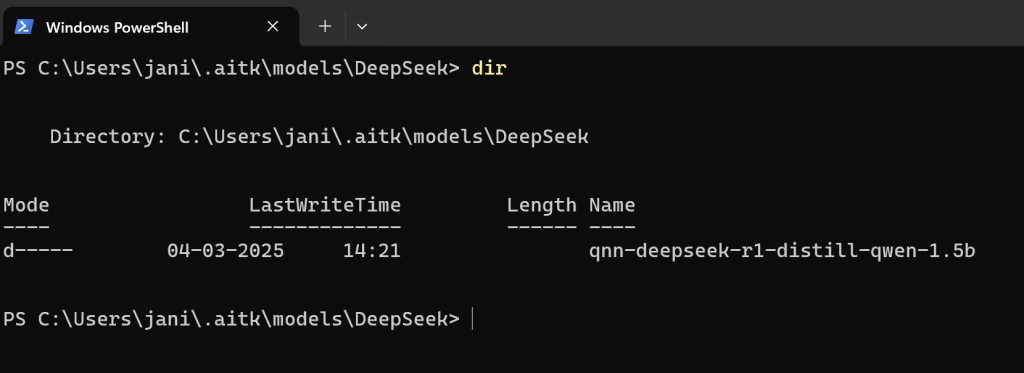

The downloaded files are stored in the .aitk\models directory of your home folder.

Accessing the DeepSeek Model Through the API

The AI Toolkit extension exposes an OpenAI-compatible API endpoint on localhost, port 5272. You can use the standard OpenAI libraries to access the models; you only need to set the model name to the one you downloaded from the model catalog.

Below is the code snippet to access the model programmatically:

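```python
from openai import OpenAI

# Point the standard OpenAI client at the AI Toolkit's local endpoint.
client = OpenAI(
    base_url="http://127.0.0.1:5272/v1/",
    api_key="x",  # required by the client library but not used by the local server
)

chat_completion = client.chat.completions.create(
    messages=[
        {
            "role": "user",
            "content": "How much is 2+2?",
        }
    ],
    # Use the name of the model you downloaded from the catalog.
    model="qnn-deepseek-r1-distill-qwen-1.5b",
)

print(chat_completion.choices[0].message.content)
```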
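DeepSeek R1 models typically write their chain of thought inside `<think>...</think>` tags before the final answer, so the content returned by the call above may contain both. The hypothetical `split_reasoning` helper below separates the two. It assumes the local endpoint passes those tags through unchanged, which is worth checking against your own responses.

```python
import re

# Hypothetical helper: assumes the model wraps its reasoning in <think>...</think>.
_THINK_RE = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def split_reasoning(text: str) -> tuple[str, str]:
    """Return (reasoning, answer); reasoning is empty if no think block is found."""
    match = _THINK_RE.search(text)
    if match:
        reasoning = match.group(1).strip()
        answer = (text[:match.start()] + text[match.end():]).strip()
        return reasoning, answer
    if "</think>" in text:
        # Some chat templates put the opening tag in the prompt,
        # so only the closing tag shows up in the response.
        reasoning, _, answer = text.partition("</think>")
        return reasoning.strip(), answer.strip()
    return "", text.strip()


# Offline example with a made-up response string.
sample = "<think>2 plus 2 is 4.</think>\n\n2 + 2 = 4."
reasoning, answer = split_reasoning(sample)
print(answer)
```

With the live client, you would call it as `reasoning, answer = split_reasoning(chat_completion.choices[0].message.content)` and show only `answer` to end users.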

The availability of DeepSeek models on Copilot+ PCs marks a pivotal moment in making advanced AI capabilities more accessible and efficient for developers and users alike. Developers can use these models to explore and learn, and can embed them directly in the applications they ship.

With the ability to run complex reasoning models locally, AI PCs and Copilot+ PCs are set to transform how AI is used in everyday applications, combining the power of local computing with on-device models.

The post How to Run DeepSeek Models Locally on a Windows Copilot+ PC appeared first on The New Stack.