I don’t know if you’ve noticed, but we’re in an “AI or die!” era right now. Every organization seems desperate to figure out how AI fits into their business strategy. Over the past year, we’ve talked with teams at large enterprises that span industries — everything from global financial services to established automakers with heavy regulation burdens — to see how they’re actually using and managing their AI applications. Right now, there’s a flurry of definitions floating around about what makes an application “intelligent,” but only a handful of use cases, best practices and patterns have been established.

When it comes to the “enterprise” in enterprise applications — meaning the safety, compliance and performance consumers have come to expect from apps built and maintained by reputable brands — we’ve seen two big hurdles to delivering AI-embedded apps. First, teams need to acquire new AI skills and tools. Second, they must prove a solid business case — return on investment (ROI) and all — if they want their AI adoption to actually stick and scale beyond early proofs of concept (PoCs) and experiments.

When we talk about enterprise applications specifically, two obstacles pop up frequently. First is compliance: We’re seeing teams scramble to update longstanding security and governance frameworks just to accommodate AI models, sometimes making temporary exceptions to keep projects moving. Worse, some teams simply ignore those frameworks, a practice known as “shadow AI.” Then there’s the execution barrier: Teams need new AI skills and tooling, plus a solid business case with clear ROI. Otherwise, any pilot project risks becoming a one-and-done experiment instead of a sustainable practice.

Clearing Obstacles With an Enterprise AI Maturity Model

One approach that can help your organization avoid getting caught up in these blockers is an enterprise AI maturity model. Framing your approach to add “intelligence” to applications can help guide you toward your goals. This framework clarifies what it means to add AI to your applications and, more importantly, shows how to ensure you get a real payoff. In other words, it’s your path to those elusive “business outcomes” we’re all chasing with AI.

Using a maturity model as a tool for an emerging technology can seem stodgy at first. But we see it more as a map of what to expect and a “default” plan to start with. It also clarifies how to navigate the skills and ROI challenges.

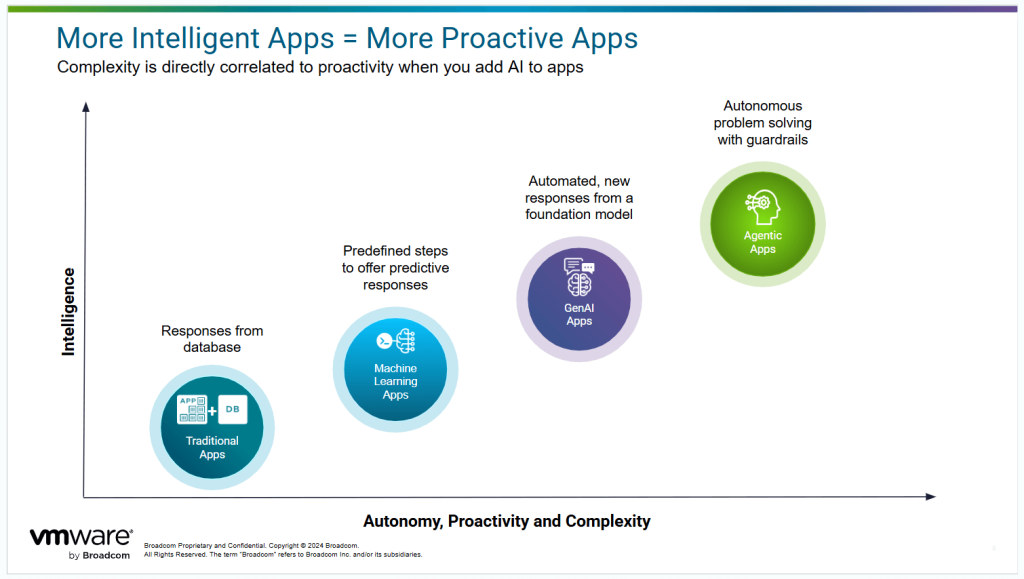

This AI app delivery maturity model maps out four stages toward more “intelligent” apps:

- Pre-AI applications that follow a three-tier model.

- Adding machine learning (ML).

- Adding generative AI (GenAI).

- Creating agentic AI apps and workflows.

However, each of these stages relies on the same basic ingredients: servers, frameworks and clear business requirements. As you move up the model, it’s really about how much agency you add to the app.

Let’s take a look.

Stage 1: Three-Tiered Apps

Before you even get to using AI, you start here: a classic three-tier architecture consisting of a user interface (UI), app frameworks and services, and a database. Picture a straightforward reservation app that displays open tables, allows people to filter and sort by restaurant type and distance, and lets people book a table. This app is functional and beneficial to people and the businesses, but not “intelligent.”
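To make the baseline concrete, here is a minimal sketch of the three tiers for the reservation example. Everything in it is hypothetical: the `Table` fields, the sample restaurants and the function names are ours for illustration, and an in-memory list stands in for a real database.

```python
from dataclasses import dataclass
from typing import Optional


# --- Data tier: an in-memory list stands in for the database. ---
@dataclass
class Table:
    table_id: int
    restaurant: str
    cuisine: str
    distance_km: float
    booked_by: Optional[str] = None


TABLES = [
    Table(1, "Trattoria Roma", "italian", 2.4),
    Table(2, "Green Leaf", "vegan", 0.8),
    Table(3, "Pasta Fresca", "italian", 1.1),
]


# --- Service tier: business rules for searching and booking. ---
def open_tables(cuisine: Optional[str] = None) -> list:
    """Return unbooked tables, optionally filtered by cuisine, nearest first."""
    matches = [
        t for t in TABLES
        if t.booked_by is None and (cuisine is None or t.cuisine == cuisine)
    ]
    return sorted(matches, key=lambda t: t.distance_km)


def book(table_id: int, guest: str) -> bool:
    """Book a table if it is still open; report whether the booking succeeded."""
    for t in TABLES:
        if t.table_id == table_id and t.booked_by is None:
            t.booked_by = guest
            return True
    return False


# --- Presentation tier: a plain-text rendering stands in for the UI. ---
def render(tables: list) -> str:
    return "\n".join(f"{t.restaurant} ({t.distance_km} km)" for t in tables)
```

Nothing here learns or infers anything. The app only filters, sorts and records what the user explicitly asks for, which is the point of Stage 1.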

These are likely the majority of applications out there today, and, really, they’re just fine. Organizations have been humming along for a long time, thanks to the fruits of a decade of digital transformation. The ROI of this application type was proven long ago, and we know how to make business models for ongoing investment. Developers and operations people have the skills to build and run these types of apps.

This provides the baseline we’re operating from and helps us judge what “better” is and if it’s worth the price.

Stage 2: Adding Machine Learning Intelligence

Before GenAI, machine learning was the next stage. This type of intelligence relies on data scientists looking through past user behavior to create predictive systems. You could be predicting simple things like the food people would order or the chance they might cancel a reservation. But you probably want to target high-value tasks like predictive maintenance and fraud detection because adding machine learning is expensive and time-consuming.
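As a rough illustration of what “predicting the chance they might cancel” involves, the sketch below trains a tiny logistic regression by gradient descent in plain Python. The history data, the two features (lead time and party size) and the hyperparameters are all invented for this example. A real project would use far more data and an established ML library.

```python
import math

# Hypothetical history: (days booked in advance, party size, cancelled? 1/0)
HISTORY = [
    (1, 2, 0), (2, 4, 0), (3, 2, 0), (5, 2, 0), (7, 2, 0),
    (10, 6, 1), (14, 6, 1), (21, 2, 1), (28, 8, 1), (30, 4, 1),
]


def features(lead_days, party_size):
    # A constant 1.0 for the bias term, then inputs scaled to roughly 0..1.
    return [1.0, lead_days / 30.0, party_size / 8.0]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train(history, epochs=2000, learning_rate=0.5):
    """Fit logistic-regression weights with batch gradient descent."""
    weights = [0.0, 0.0, 0.0]
    for _ in range(epochs):
        gradient = [0.0, 0.0, 0.0]
        for lead_days, party_size, cancelled in history:
            x = features(lead_days, party_size)
            predicted = sigmoid(sum(w * xi for w, xi in zip(weights, x)))
            error = predicted - cancelled
            for i, xi in enumerate(x):
                gradient[i] += error * xi
        weights = [
            w - learning_rate * g / len(history)
            for w, g in zip(weights, gradient)
        ]
    return weights


def cancel_probability(weights, lead_days, party_size):
    """Estimated probability (0..1) that a reservation will be cancelled."""
    x = features(lead_days, party_size)
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)))


WEIGHTS = train(HISTORY)
```

Even this toy version hints at the cost: Someone has to collect and clean the history, choose features and validate the model before the app can call `cancel_probability`.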

One reason is that the skills needed for machine learning differ from those of standard application development. Data scientists focus much more on applying statistical modeling and calculations to large data sets, and they tend to use their own languages and toolsets, like Python. They also have to deal with data collection and cleaning, which can be a tedious, political exercise in large organizations.

Meanwhile, application developers tend to have very different skill sets, tools and even working relationships with the rest of the organization. More than likely, you won’t find application developers who can be machine learning experts, nor is it common to have data scientists who can develop applications.

This skills difference means it can be difficult and costly to add machine learning to your applications. It’s not as easy as having an application developer learn how to add new UI elements or incorporate new frameworks. This separation creates a bottleneck, limiting how often machine learning (ML) is embedded into enterprise applications.

This cost is probably why traditional ML never produced the kind of wave of intelligent applications we’re now seeing with GenAI. Unlike traditional ML, which required specialized teams and complex integration, GenAI arrived with pretrained models and user-friendly APIs. Businesses could suddenly generate text, code and even images without hiring specialized teams or adding new infrastructure, staff and processes. That ease of implementation made it more accessible and practical than earlier ML approaches.

Where traditional ML struggled to become ubiquitous in enterprise applications, GenAI is rapidly finding a place because it reduces the complexity and cost barriers that had held ML back for years.

Stage 3: Applying Generative AI

This is where generative AI enters the picture, changing the cost-benefit equation. From a business standpoint, it’s generally cheaper and faster to add AI using generative models than it is with traditional machine learning. That means you can apply AI more broadly across your portfolio, not just to high-value apps that justify the time and money.

Let’s revisit our restaurant reservation example: Imagine letting users say, “Book me something like my last Italian spot, but with vegan options.” GenAI tackles the heavy lifting — interpreting human language, filtering data and responding — without the specialized data science work that a traditional ML workflow approach would require.

The nature of generative AI also tackles the “people problem.” While application developers might not have data science skills, GenAI feels far more accessible. As my colleague Adib Saikali puts it, “You don’t need to know anything about linear algebra, or calculus, or any of the math you learned in university. All you need to know is how to call a REST API.”
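Here is roughly what “call a REST API” can look like for the reservation request above. This is a sketch under assumptions: The endpoint URL and model name are placeholders, and the request and response shapes follow the chat-completions convention that many providers use, so check your provider’s documentation for the real schema. The code only builds the request and parses a response; it does not send anything.

```python
import json
import urllib.request

# Placeholders: substitute your provider's real endpoint and model name.
API_URL = "https://genai.example.com/v1/chat/completions"
MODEL = "example-chat-model"


def build_request(user_message, past_reservations, api_key):
    """Build (but do not send) an HTTP POST asking the model for help."""
    system_prompt = (
        "You help users book restaurant tables. "
        "Past reservations: " + json.dumps(past_reservations)
    )
    payload = {
        "model": MODEL,
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_message},
        ],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def extract_reply(response_body: bytes) -> str:
    """Pull the assistant text out of a chat-completions-style response."""
    data = json.loads(response_body)
    return data["choices"][0]["message"]["content"]
```

Against a real endpoint, you would send the request with `urllib.request.urlopen(request)` and pass the response bytes to `extract_reply`. There is no statistics or model training in sight, only HTTP and JSON.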

As a result, you can start layering more advanced intelligence into more of your apps. In larger organizations, your developers likely have many of the tools and know-how to do that already. A centralized “AI broker” approach ensures security, governance and guardrails are applied consistently, rather than leaving each team to figure that out on their own. This is essential because, all too often, security teams are left to figure out how to secure and govern it all after the fact.
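To show the general shape of the broker idea, here is a minimal, hypothetical sketch: one shared function that every team calls instead of talking to a model directly. The allowlist, the email-redaction rule and the audit log are assumptions we made up for illustration. This is not how any particular product implements brokering.

```python
import re

APPROVED_MODELS = {"example-chat-model"}  # hypothetical allowlist
AUDIT_LOG = []                            # stands in for a real audit trail
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


class PolicyError(Exception):
    """Raised when a request violates the shared policy."""


def broker_call(team, model, prompt, send):
    """Apply shared policy, then forward the prompt via send(model, prompt)."""
    if model not in APPROVED_MODELS:
        AUDIT_LOG.append((team, model, "denied"))
        raise PolicyError(f"model {model!r} is not approved")
    # Simple guardrail: strip email addresses before they leave the company.
    safe_prompt = EMAIL.sub("[redacted-email]", prompt)
    AUDIT_LOG.append((team, model, "allowed"))
    return send(model, safe_prompt)
```

Because the policy lives in one place, changing the allowlist or adding a guardrail does not require touching each team’s application code.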

Stage 4: Agentic Applications

What’s beyond generative AI? At the moment, the AI community has settled on a concept called “agentic AI,” or AI that autonomously “does stuff.” While generative AI capabilities are amazing, they’re more “read only,” responding to your prompts with answers, suggestions and predictions. In contrast, agentic AI doesn’t just answer queries; it acts on them… autonomously.

This is done by introducing a new pattern to generative AI. When you put in a request, an agentic AI application will:

- Break down a request into a plan.

- Execute each step (calling APIs, running microservices, executing code).

- Analyze the outcomes, adjusting if needed.

Think back to the restaurant reservation app. First, the agentic application would reason out that it needs to find your preferences for a restaurant. This might mean asking you directly, but it might also mean looking at your past reservations and reviews you gave. Perhaps you like sitting outside, but is it raining? The intelligent application would look up weather for your area. And so forth. Once this “research” is built up, the agentic AI will then use its tools to look up available tables at matching restaurants, and if it finds a good match, make the reservation for you, again by making an API call. Of course, a human (that’s you!) can step in at any point to move the workflow along or modify it.
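The plan, execute and analyze loop can be sketched in a few lines. In this simplified version, the plan is hard-coded and every tool is a stub with made-up data. A real agent would ask a model to produce the plan and would call real APIs. All names here are hypothetical.

```python
# Hypothetical tools; in a real app each would wrap an API call.
def get_preferences(state):
    state["cuisine"], state["seating"] = "italian", "outdoor"


def get_weather(state):
    state["raining"] = True


def find_tables(state):
    tables = [
        {"id": 1, "cuisine": "italian", "seating": "outdoor"},
        {"id": 2, "cuisine": "italian", "seating": "indoor"},
    ]
    state["matches"] = [
        t for t in tables
        if t["cuisine"] == state["cuisine"] and t["seating"] == state["seating"]
    ]


def book_table(state):
    state["booked"] = state["matches"][0]["id"] if state["matches"] else None


TOOLS = {
    "get_preferences": get_preferences,
    "get_weather": get_weather,
    "find_tables": find_tables,
    "book_table": book_table,
}


def make_plan(request):
    # Step 1: break the request into a plan (a model would generate this).
    return ["get_preferences", "get_weather", "find_tables", "book_table"]


def analyze(step, state):
    # Step 3: inspect the outcome of a step and adjust if needed.
    if step == "get_weather" and state.get("raining") and state.get("seating") == "outdoor":
        state["seating"] = "indoor"
        return "switched to indoor seating because of rain"
    return "ok"


def run_agent(request):
    state = {"request": request}
    trace = []
    for step in make_plan(request):
        TOOLS[step](state)                         # Step 2: execute the step.
        trace.append((step, analyze(step, state)))
    return state, trace
```

Calling `run_agent("Book me an Italian place tonight")` books the indoor table, because the weather check changes the seating preference before the search runs. The returned trace is also a natural place for a human to review or override a step.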

Doing this requires an additional set of services on top of the base-level generative AI tool set. You need to manage the “context” and “memory” of this ongoing process, you need a framework for making those API calls, and so forth.
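One way to picture “context” and “memory” is as two small stores: durable facts the agent has learned, and a rolling window of recent turns. The sketch below is a hypothetical illustration with names of our own choosing. Agent frameworks provide richer versions of the same idea.

```python
from collections import deque


class AgentMemory:
    """Keeps long-lived facts plus a bounded window of recent turns."""

    def __init__(self, max_turns=4):
        self.facts = {}                       # long-lived "memory"
        self.turns = deque(maxlen=max_turns)  # short-lived "context"

    def remember(self, key, value):
        self.facts[key] = value

    def add_turn(self, role, text):
        # The oldest turn is dropped automatically once the window is full.
        self.turns.append((role, text))

    def build_context(self):
        """Assemble the text that would accompany the next model call."""
        lines = [f"fact: {k}={v}" for k, v in sorted(self.facts.items())]
        lines += [f"{role}: {text}" for role, text in self.turns]
        return "\n".join(lines)
```

Bounding the window keeps each model call small, while the facts dictionary preserves what matters across steps, such as a preference for Italian food.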

The good news here, again, is that your application developers don’t need to learn a completely new skill set like machine learning. They can use their existing tools and skills to start using agentic AI to add intelligence to their applications.

This agentic AI concept has been evolving over the past year or so, and many frameworks have been developed. We’ve been thinking through the stack of services needed: the data stores, the execution frameworks, and the basics we already have with Spring AI and AI brokering in the Tanzu stack.

Our advice is to make sure you have the basics of generative AI apps in place (see above). With that, you can start learning and experimenting with agentic AI apps. For example, you can check out the work we’ve been doing with Anthropic’s Model Context Protocol in Spring AI.

Intelligently Escape the Fog of Opportunity

Hopefully this maturity model highlights that you don’t need to start from scratch — or spend a fortune — to add AI to your existing apps. You could potentially begin right now with the people and tools you already have in place. In fact, we recently entitled all Tanzu Platform (and Tanzu Application Service) customers to use the GenAI service as part of their existing license so you can get started adding AI models to your applications today!

There’s one last point to consider. So far, we’ve focused on application developers. Obviously, they’re critical to building apps. But operations teams matter just as much. The good news is that they already know how to run, manage and secure most parts of these applications, because the core building blocks are the same. Sure, there’s nuance and a few new things to learn, but from an ops perspective, AI is largely just another service to oversee. With the right platform, they don’t have to retool everything or break the budget. You can simply keep evolving your apps to make them even smarter.

If you would like to discuss your AI apps use case with the Tanzu team, please reach out to us.

The post Evolving From Pre-AI to Agentic AI Apps: A 4-Step Model appeared first on The New Stack.